Technology permeates every aspect of our lives — shaping our thoughts, behaviours and choices. Digital advancements have brought us numerous benefits. However, it’s time for us to open our eyes to the threats they pose as well — in particular, to our ability to think what we wish. Our freedom of thought itself is at risk.

It’s difficult to believe, in 2024, that there was a time when cigarettes were widely considered harmless or even glamorous. Big Tobacco fought hard to manipulate consumers and push back on critics. It took a 1964 US Surgeon General’s report, followed by years of research and government intervention, to alert the public to the health risks. Eventually, policy makers put appropriate regulations for the industry into place.

Why aren’t we confronting big tech in the same way? Why aren’t we taking a similar approach to digital harms? They may be harder to recognize, but they are every bit as pervasive and dangerous as the physical harms regulators worked to mitigate in previous eras.

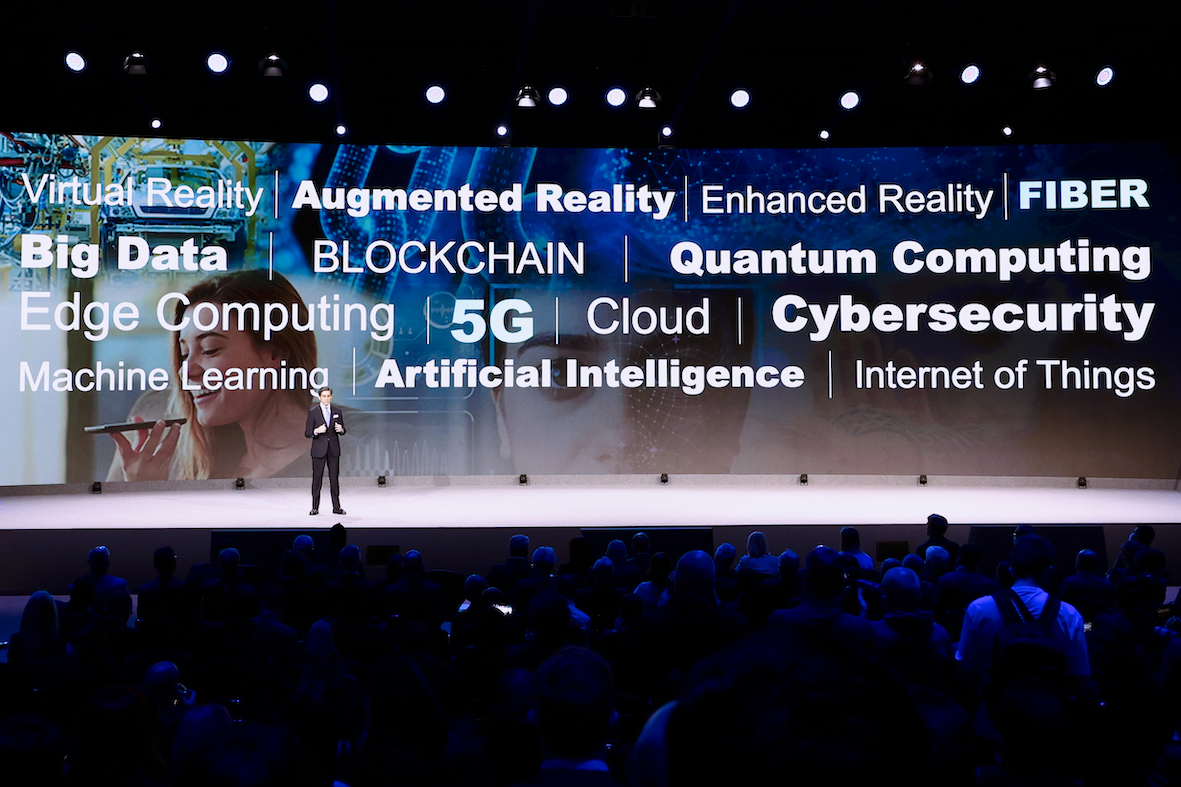

The confluence of social media, generative artificial intelligence (AI), workplace productivity tools, virtual reality and soon-to-emerge human-machine interfaces has the potential to manipulate our minds as never before, exploiting our biases and nudging us toward the outcomes desired by those who control these technologies. Indeed, digital nudging, the practice of designing user interfaces to guide people’s choices and behaviours in a digital environment, is already used extensively on digital platforms. Although it may seem harmless at first glance, digital nudging can undermine our ability to think critically and independently by taking advantage of our psychological vulnerabilities and employing persuasive tactics. A classic example of a digital nudge is the default option, which is not always in the consumer’s best interest. For example, an app’s default privacy settings may lead users to unknowingly share more personal information than they would prefer.

Another consequence of the digital era is micro-targeting with personalized content, driven by data hoarding, AI algorithms and recommendation systems. Having acquired vast quantities of data about our personal preferences, digital platforms have used it to create massive online echo chambers that shield us from diverse perspectives and paint pictures of the world designed to keep us hooked. Algorithmic filtering of information limits our exposure to differing viewpoints and thus reduces our ability to form nuanced, informed views. In extreme cases, this algorithmic tunnel vision has led to the destabilization of societies. We saw this most recently with the disinformation that spread rapidly on digital platforms in the lead-up to the January 6, 2021, riot at the US Capitol.

Other cases have shown the devastating harm that excessive social media use can do to users, young users in particular. In 2017, Molly Russell, a 14-year-old from the United Kingdom and a frequent Instagram user, took her own life. In 2022, a British court concluded that Russell died “while suffering from depression and the negative effects of online content” and that the internet “affected her mental health in a negative way and contributed to her death in a more than minimal way.”

In the workplace, concerns are growing about productivity tools that arguably infringe, sometimes overtly, on workers’ rights. For example, Amazon was recently reported to use AI surveillance to monitor its delivery drivers and flag them for violations. More subtle examples include monitoring video calls and meetings to gauge employee engagement.

In 2022, US Surgeon General Dr. Vivek Murthy described the country’s top health concern — the “staggering” level of mental health problems among the population — and identified social media as a significant contributory factor to these problems. In May 2023, he issued a public advisory stating “there are ample indicators that social media can also have a profound risk of harm to the mental health and well-being of children and adolescents.”

To protect ourselves from these encroachments, we can draw lessons from history. In Canada, the government and regulators require producers to print warning labels on cigarette packs and alcohol bottles. They have also restricted the use and advertising of these and other hazardous products to protect consumers’ health and safety. We have standards and codes of conduct governing advertising to children, and rating systems for films and television shows that guide parents and viewers on content and age appropriateness.

Likewise, we enact laws to protect our physical safety and our physical freedoms. It is illegal for someone to trespass onto your property, for example, and invade your privacy. So, why would we allow technology to penetrate the deep recesses of our minds and our children’s minds to hijack our thoughts, without limits or repercussions? Clearly, digital harms require the same standard of vigilance and care as physical harms.

Moreover, there is an urgent need for greater transparency, accountability and understandable informed consent surrounding the design, capabilities and use of these technologies. Warning labels, digital watermarks and disclosure requirements can make users aware that the content or services they access were generated or delivered by AI, and alert them to the psychological manipulation that might be at play. Technology companies must be required by law to be more transparent about their intentions, practices, technology designs and implementations. There should be stronger restrictions on targeting youth.

This is not a demonization of the industry (I, myself, am a technologist, innovator and entrepreneur). Rather, this is a call for self-reflection and strong action to right the ship. Government, industry and the public at large must fully recognize and acknowledge the unintended (and, in some cases, possibly intended) harms that have come along with the productivity and consumer gains of technology. The common goal should be to preserve diversity of thought, critical thinking and independent decision making — the foundations of a healthy democracy and advanced society.

As individuals, we must educate ourselves to become more discerning consumers of information. We must recognize the importance of preserving our cognitive liberty. We must expect higher standards from the private sector and demand that our governments build a coherent global regulatory framework to protect our fundamental rights, including the right to think what we think, uninfluenced by invisible digital hands.

It’s time we unmask the threats posed by digital technologies as diligently as we do those posed by physical goods. Perhaps one day in the distant future, a human writer will say, “It’s hard to believe there was a time when digital technologies were seen as harmless and that we had no laws to regulate their use.” We owe it to ourselves and to the generations that follow to demand a digital future in which we remain free to think without interference.