The following is the edited text of a background memo prepared for the Council on Foreign Relations, New York, NY, for the Council of Councils Annual Conference taking place May 7–9, 2023.

Artificial intelligence (AI) constitutes a general-purpose technology — perhaps one with transformative potential not seen since the Industrial Revolution. The technology’s power to spur both beneficial and harmful change is enormous. Now is the time to understand collective interests around AI and to find ways to build governance in the human and global interest.

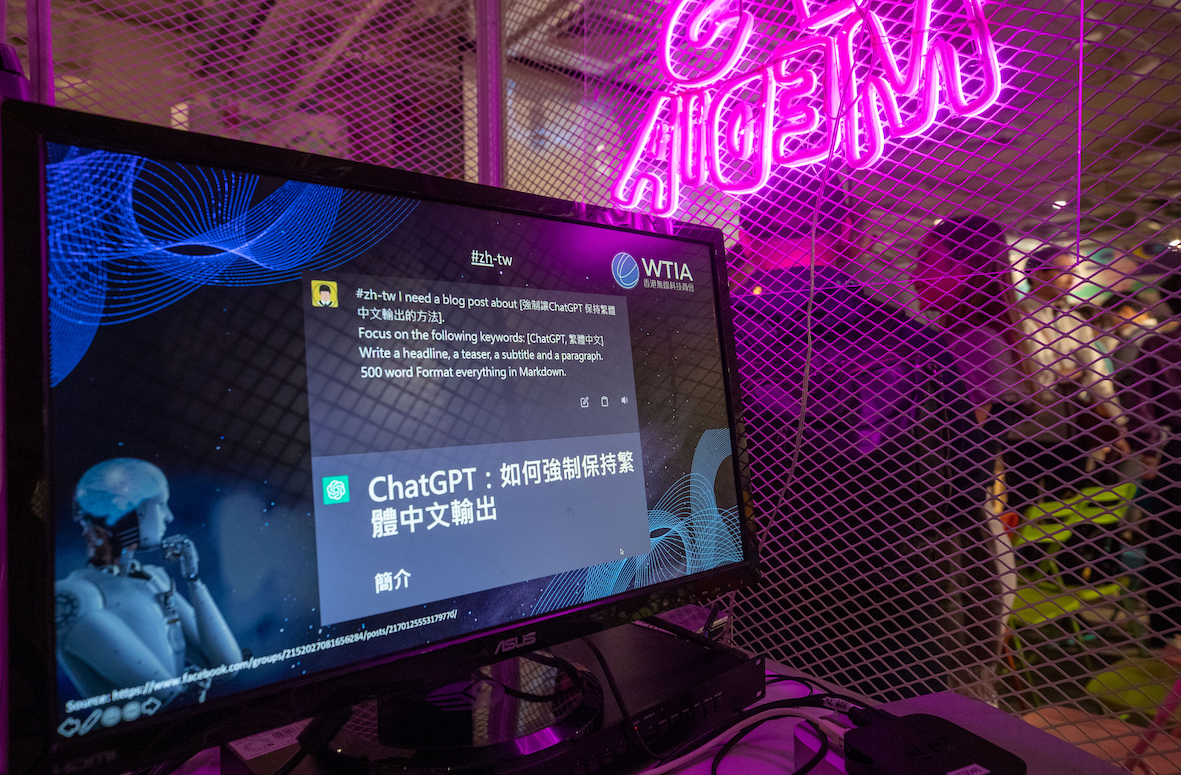

Advances in user-friendly generative AI, which can produce text, images, audio and synthetic data, have unleashed a frenzy of new initiatives and captured mainstream attention. The best example is ChatGPT, which reached about 100 million users in two months, an unprecedented milestone that took TikTok some nine months and Instagram two and a half years to reach. ChatGPT’s popularity and its checkered performance (it can generate convincing but completely incorrect material at times) have opened the floodgates of debate on the benefits and risks associated with AI. That debate has revived long-standing ethical and legal concerns related to privacy and harmful biases, and it has also surfaced new issues. No policy area, whether democratic integrity, labour markets, education, market competition, art creation or science, will remain untouched.

Beyond domestic policy, AI will become increasingly central in geopolitics and international cooperation, changing the worlds of finance, climate change, trade, development, and military operations and security. But even with that shift, only a patchwork of initiatives exists for data (the raw material of AI) and digital issues. Those initiatives are scattered across nations and do not provide a unifying framework upon which to build international AI regulation.

Despite active and ongoing discussions over the last decade within the United Nations, the Group of Twenty (G20), the Organisation for Economic Co-operation and Development (OECD), and elsewhere, no consensus has emerged on how to approach either the digital transformation taking place or international data regulation. No global institution has a substantive mandate to develop a policy model or regulations, making it difficult to initiate an international framework. The hope was that the G20 would establish a starting point for global norms and standards on data and digital issues, and general G20 AI principles were agreed to in 2019; however, the G20 has made no substantive progress on governance to date. India’s G20 presidency in 2023 is leading the way on digital public infrastructure, particularly for developing countries, but landing any action on governance will be challenging. The needs of poorer countries will be integral to governance models, lest digitization and AI further increase inequalities between nations as well as within them. Africa, for example, can benefit from AI under the right conditions.

The European Union took a proactive stance by implementing the General Data Protection Regulation (GDPR) in 2018, which governs the collection, processing and storage of EU citizens’ personal data. Companies operating in the European Union that fail to comply with the GDPR’s rules face significant financial penalties. Furthermore, each EU member state is required to create a data protection authority to monitor and enforce the GDPR. That sweeping law created a so-called Brussels Effect, whereby some non-EU countries followed aspects of GDPR in their own privacy legislation. Despite those developments, significant differences on first principles for managing cross-border data and digital flows remain. At the philosophical level, the European Union places a primary focus on individual data rights, while the United States is concerned about over-regulation stifling innovation, and China’s model is massively state-centric.

The meteoric rise of ChatGPT has thrust the urgency of those policy issues into public light. Some AI experts and policy makers argue that, left unregulated, future AI developments could pose an existential risk to humanity, and that AI therefore requires international regulatory efforts on par with those aimed at mitigating climate change. Those voices have called to pause AI experimentation at scale pending the establishment of clear governance rules, or even to “shut it all down” for fear of an extinction-level event.

Not surprisingly, AI’s potential has become significant to national security and industrial policy planners at the centre of national governments. China launched its AI plan in 2017, with the clear ambition of becoming the undisputed global leader by 2030 in AI technology, research and markets, while also taking advantage of a centralized system to concentrate resources and leverage operational alignment. The United States took an important strategic step with the National Artificial Intelligence Initiative Act of 2020. That act established the National Artificial Intelligence Initiative, which seeks to ensure continued US leadership across the whole range of AI research and development fields; prepare the US workforce, economy and society for the integration of AI; ensure federal agency coordination; and lead the world in using trustworthy AI systems in public and private sectors. In addition, the National Security Commission on Artificial Intelligence produced its final report in 2021, concluding that the United States should act now on AI to protect its security and prosperity and safeguard democracy.

Overall, the United States and China have some similar strategic objectives, fostering conditions for direct competition. Some of that is already apparent: the 2020 CHIPS for America Act calls for the United States to secure AI research and development and supply chains. China appears to be initiating security reviews of foreign tech companies for cyber risks to protect its own supply chains.

The European Union is currently considering an ambitious AI Act that would include stronger rules for data, transparency, accountability and human oversight, and frame AI approaches to vital sectors such as health care, finance, energy and education. The European Parliament was scheduled to vote on the draft act by the end of April 2023, although some EU law makers have called for further action on generative AI and for a summit of world leaders. The Algorithmic Accountability Act was reintroduced in the US Congress in February 2022 and has many of the same core objectives as the European Union’s AI Act. The Biden administration also released the Blueprint for an AI Bill of Rights in October 2022. Other countries, including Brazil, Canada, Japan and Korea, are reviewing national AI legislation, as are several US states. Over the past year, China has been instituting nationally binding regulations in targeted areas, including an algorithm registry and rules for generative AI. Following the release of Alibaba’s generative AI chatbot tool, Chinese regulators will now require Chinese tech companies to submit generative AI products (for example, online chats and deepfake generators) for assessment before public release. If artificial general intelligence applications come to fruition, AI governance may yet prove to be as challenging for autocratic governments as it is for democratic ones.

The OECD maintains an AI Policy Observatory that is tracking 800 AI policy initiatives across 69 countries (although the observatory found that only a handful of initiatives were evaluated or reported on after the fact). A number of other international initiatives are also emerging, including the UN Educational, Scientific and Cultural Organization’s work on global ethics for science and technology, which shares information and helps build capacity; the UN AI for Good summits on AI resolutions to advance the Sustainable Development Goals; and the OECD Principles on Artificial Intelligence, which provide a foundation for innovative and trustworthy AI that respects human rights and democratic values. However, none of those initiatives are advancing international regulation or building a concrete governance model. Nor have they been empowered to do so.

Given this context, policy makers have limited options to pursue global governance for AI. The Group of Seven (G7) will discuss AI governance again in 2023, and the Japanese presidency hopes to agree to an international framework for AI regulation and governance. However, even the G7 is not of one mind on how to regulate in these areas. Essentially, two schools of thought have emerged. The first group, led by France, Germany and Italy, takes a broad, law-based approach modelled on the European Union’s AI Act. Canada could also land in that first group, depending on how its legislation evolves. The second group, led philosophically by the United States, Japan and the United Kingdom, looks to identify narrower points that require regulation and to address them via softer legal tools (although the United States’ proposed Algorithmic Accountability Act would constitute harder regulation if passed). If the G7 can reach agreement on a framework for international AI regulation, it could then broaden collaboration by expanding to the G20 and beyond. Agreement will be difficult, however, given the high-level and political nature of the discussions among ministers and leaders on such a technical topic, and the many different elements of AI itself.

A complementary, more bottom-up approach could have potential, drawing on the lessons of the global financial crisis and the creation of an informal coordinating group, the Financial Stability Board. Indeed, a G7 leader-empowered “Digital Stability Board” or another informal, technically driven group could build multi-stakeholder processes to coordinate work on prioritizing standards for AI and to ensure that this work keeps up with the latest changes in technology.

Such an approach would not require establishing a new organization or reforming the mandates of existing ones. Most of the pieces are already in place, and existing bodies could be leveraged, including the Forum on Information and Democracy and groups such as the Open Community for Ethics in Autonomous and Intelligent Systems (OCEANIS), which was created by the Institute of Electrical and Electronics Engineers, standards development organizations, and the private sector. The new body could also build on existing work, such as that of the EU-US Trade and Technology Council’s Joint Roadmap on Evaluation and Measurement Tools for Trustworthy AI and Risk Management and the OECD’s Framework for the Classification of AI Systems, among others. The Global Partnership on Artificial Intelligence, launched via the French and Canadian presidencies of the G7, could also play a role in bridging ideas into practice.

An abridged version of this piece has appeared in The Hill Times.