The development of regulatory frameworks and standards for artificial intelligence (AI) has been far outpaced by the explosive growth of AI research and applications. While governments and corporations around the world are rushing to use AI as a miracle weapon in their quest to win the global technology race, the fundamental building blocks of AI governance are not yet in place.

Many billions of dollars in research and corporate investment have produced a boom in AI applications across a wide array of activities, in both civilian and defence and security contexts. AI functions such as machine vision, speech recognition and translation, among others, are being used in smart and interactive robots, cars, drones, medical equipment and weapon systems. Fundamental limitations remain, however. After more than 60 years of research, nothing even remotely akin to human intelligence is within reach. We still have a long way to go, in both research and governance.

Throughout AI’s history, intense competition between very different science and engineering methodologies has shaped the research as it cycled through periods of progress and optimism (“AI fever”) followed by setbacks and pessimism (“AI winters”). AI’s big push came with machine learning, a process whereby a huge volume of data is fed into an algorithm that applies an essentially general learning strategy: it looks for patterns in the data and then applies them to new cases. The 2012 ImageNet competition brought the decisive breakthrough: deep learning through neural networks, demonstrated by a model from Geoffrey Hinton and colleagues at the University of Toronto.

Yet deep learning neural networks are already yesterday’s frontier; they are now generating diminishing returns. An intense search is under way within the global AI community for hybrid models that “integrate deep learning, which excels at perceptual classification, with symbolic systems, which excel at inference and abstraction.” Symbolic AI (the dominant AI paradigm until the late 1980s) is now being resurrected as the new frontier in AI, as researchers strive to accelerate progress from data to knowledge and understanding. Cutting-edge research is focused on building a cognitive platform for decision making. Massive efforts are under way to improve machine-learning techniques, such as generative models, unsupervised and reinforcement learning, and to design advanced, specialized AI chips. At the same time, AI research is addressing the unresolved questions of symbolic AI with new vigour. This research includes, for instance, learning with less data, deep question answering, AI for and on the cloud, combining AI with quantum computing, and knowledge exchange with cognitive science and neuroscience. All of these activities open up more room to address society’s most pressing needs in health care, climate change and inequality.

In short, discovering and implementing viable new AI technology paradigms remains a wide-open frontier. But equally important is the largely unresolved struggle to design and implement robust regulatory frameworks for AI governance. There are plenty of initiatives, by both governments and corporations, but they still have a long way to go before they amount to effective governance of AI.

This challenge becomes clear when we take a closer look at the fundamental objectives of AI governance. The first is to determine how the gains from AI are shared, especially with regard to data. As discussed in CIGI’s essay series Data Governance in the Digital Age, we thus need to know:

- who owns the data and what these data rights entail;

- who is allowed to collect what data;

- what we can say about the quality of the data;

- what the rules are for data aggregation; and

- what the rules are for data rights transfer.

A second, equally important task of AI governance is to develop institutions and regulations not only to reduce the negative impacts of AI — which could undermine inclusive growth, sustainable development, human-centred values and fairness — but also to increase transparency, security and safety, and accountability.

With regard to these two fundamental objectives of AI governance, current initiatives are primarily concerned with laying out basic definitions and legal frameworks. Europe’s General Data Protection Regulation (GDPR) is different: this law has codified new standards for data privacy and security. But it remains to be seen whether the GDPR can be implemented effectively, and whether this uniquely European approach will be accepted by others. In fact, while China has indicated that it considers the GDPR to be a useful trial benchmark, the American information technology industry remains vehemently opposed to it.

This brings us to a third important task of AI governance: to ensure that national AI policies facilitate rather than constrain international cooperation, through knowledge sharing and harmonization of national AI governance standards. There is every reason to avoid a worst-case scenario in which national governments pursue AI governance in isolation, as this would seriously limit progress in AI research.

Nations Differ in Their AI Research Trajectories

Success in AI research and applications requires a set of extremely demanding capabilities and assets. Nations differ in their mastery of these success factors, and hence they pursue distinct AI research strategies. Vast data sets and sophisticated algorithms are two essential prerequisites. But equally important is a third component: advanced, specialized AI chips are needed to increase computing power and storage while reducing energy consumption. Companies that have access to leading-edge AI chips are essentially in the express lane, where improvements continue to be rapid and mutually reinforcing.

This leads us to a fourth task. Well-coordinated efforts to improve the quality of data through standardization and enhanced capabilities for data analytics are essential to success for those engaged in AI development.

A fifth, critically important objective is to secure a large pool of highly educated and experienced AI researchers and engineers who can in turn foster and draw on the talents of students from all over the world. Restrictions on their free movement across borders are anathema to progress in AI research.

Finally, to improve the speed of data transmission for the training of algorithms, a successful transition to fifth-generation telecommunications infrastructure is necessary. If data cannot move where it is needed, it is useless.

Debates on AI governance need to keep in mind that countries differ in their AI research trajectories. Take the United States, for example: apart from data and algorithms, America’s AI leadership is based primarily on advanced, specialized AI chips. The markets for leading-edge AI chips are tight oligopolies, controlled by a handful of US companies. Equally important, US companies control the software for integrated circuit design (electronic design automation, or EDA, tools) and are (with the important exception of ASML, a Dutch company) the lead players in semiconductor production equipment. Given such an extremely unequal distribution of market power, a handful of US market leaders can shape technology trajectories, standards and pricing strategies for AI chips.

Both Europe and China are far behind the United States in the critically important AI chip ecosystem. The real challenge for Europe is to define a handful of AI niches that require domain knowledge for specific applications such as health or transportation systems. Aggressive competition policy helps, as do regulatory frameworks such as the GDPR and the forthcoming Digital Services Act, which will set new rules regarding digital platforms’ responsibility for illegal content and disinformation online. AI standardization is another area where the European Union should have some leverage. However, all of these regulatory efforts need to be supported by joint efforts to strengthen domestic technological and management capabilities.

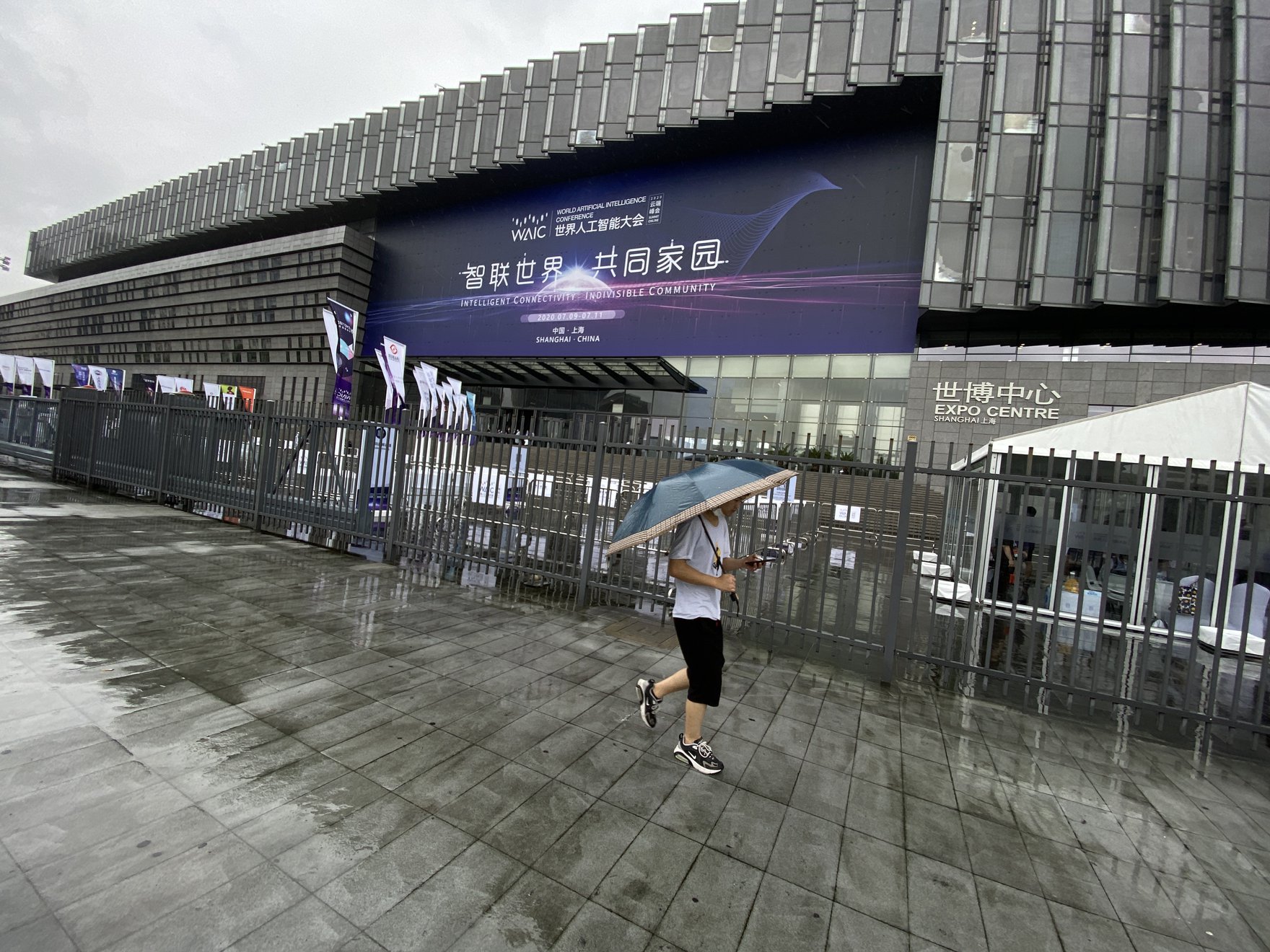

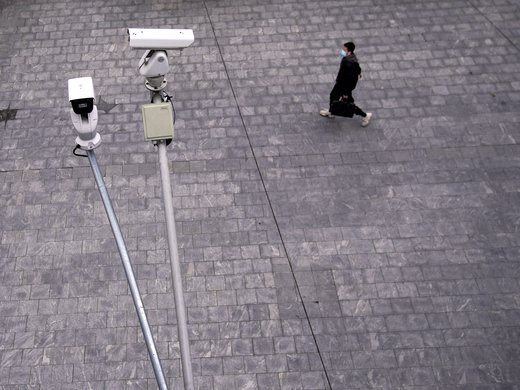

In China, public research institutions conduct AI research into both neural networks and symbolic AI (some of it at the frontier). Interactions with industry, however, remain limited, as industry’s primary concern is to forge ahead in China’s rapidly growing mass market for AI applications. China’s AI strategy has emphasized data as a primary advantage. With fewer obstacles to data collection and use, China has amassed huge data sets, the likes of which do not exist in other countries. The assumption was that China could always purchase the necessary AI chips from global semiconductor industry leaders. Until recently, AI applications run by China’s leading technology firms were powered by foreign chips, mostly designed by a small group of top US semiconductor firms. However, this global knowledge sourcing was not supported by a robust body of domestic research. With the escalation of the US-China technology war, this lack of research has become a major vulnerability for China’s AI industry. China’s reliance on global knowledge sourcing was in fact in line with the “gains from trade” globalization doctrine, which was widely shared before the outbreak of the trade and technology wars and the coronavirus pandemic. Paul Romer, for example, made this argument in 1994, and Richard Baldwin has more recently popularized these insights in widely disseminated policy prescriptions.

In short, there is no “one best way” to approach AI research and deployment. China, Europe and the United States can only play the cards they have, and diversity will continue to shape their unique AI development trajectories. And, as theories of innovation and complexity have shown (see, for example, Robert Axelrod and Michael D. Cohen’s Harnessing Complexity: Organizational Implications of a Scientific Frontier and Cristiano Antonelli’s Handbook on the Economic Complexity of Technological Change), diversity can facilitate knowledge exchange and cooperation.

What Scope Is There for Cooperation?

This persistent diversity of national AI research trajectories indicates that resolving AI governance challenges through cooperation will not be easy. The Brookings Forum on Cooperation on AI can play an important trailblazing role.

It makes sense to start with a plurilateral approach, focusing on collaboration between Australia, the European Union, Japan, the United Kingdom and the United States, countries that share at least some common values and institutional histories. But at some stage, the Brookings Forum on Cooperation on AI needs to lay out a longer-term strategy that defines interactions with other countries, such as China, India, South Korea and Taiwan, Province of China. These countries already play an important role in the development of AI research, patents and standards. Freezing out China from global AI governance would cause irreparable collateral damage to all members of the global AI community.

But more fundamental issues are at stake. Due to the US-China technology war, international governance of AI that is based on state representation has ceased to be effective. Gideon Rachman’s September 21 contribution to The Financial Times said it well: “A rules-based order is giving way to something that feels more like 19th-century imperialism.” The US government, for its part, is further expanding the extraterritorial reach of US law through its Clean Network initiative, while neglecting UN organizations. China, on the other hand, has successfully occupied the levers of command and control in the International Telecommunication Union and other UN organizations. The spread of technology warfare is severely handicapping current efforts to develop international standards for AI. As established rules of trade are broken, mutual distrust and rising uncertainty are disrupting international trade, investment and knowledge exchange, especially in high-tech industries such as AI. In addition, border closings intended to control and slow the spread of COVID-19 have further increased barriers to global knowledge sharing.

As a result, international AI standardization based on state representation is no longer able to keep pace with the breathtaking speed of AI research and applications. Geopolitical antagonisms threaten to politicize the work of technical committees. Gone are the days when international technical standardization was all about a cooperative search for technical solutions to benefit transnational corporations, trade and technological innovation. Alternative approaches are emerging. For example, the Institute of Electrical and Electronics Engineers’ global governance model seeks to establish a technical, merit-based, one-entity-one-vote standardization approach, while trying to bypass constraints imposed by local laws. But overall, these new initiatives remain dominated by search and experimentation.

At the same time, open-source platforms and communities play an increasingly active role in AI standardization. Technical standard setting is painfully slow, while open-source projects tend to move remarkably fast. In response, many AI tools have shifted toward open-source environments to provide software-based interoperability. In addition, open-source communities tend to avoid traditional fora out of fear that geopolitics may trump ethical and moral principles. For instance, at the recent Spark + AI Summit 2020, Databricks announced that its open-source machine-learning platform MLflow is becoming a Linux Foundation project.

For many AI research groups, an important motivation for relying on open-source platforms is protection against the extraterritorial reach of US technology export restrictions. The Linux Foundation puts it this way: “Open source technologies that are published and made publicly available to the world are not subject to US export restrictions. Therefore, open source remains one of the most accessible models for global collaboration.”

Everywhere, companies and research labs, as well as university researchers and students, are using this open-source model of collaborating in AI research and technology development. The rapid expansion of open-source platforms and communities is already transforming the organization of AI research and governance. It is high time to systematically explore how these new hybrid forms of cooperation work, and what needs to be done to improve access for smaller companies and research labs across the globe.

There is no guarantee that open-source platforms on their own will improve the distribution of AI research capabilities and assets. As highlighted in the State of AI Report 2020, only 15 percent of AI research papers publish their code, and these are predominantly from academic groups. Global platform leaders typically embed their code in proprietary frameworks that cannot be released. New approaches to AI governance are needed to address this important, unresolved issue.

Overall, however, there is reason for cautious optimism. Despite the disruptions caused by technology war and the global pandemic, the inherent vitality of global AI research and development communities keeps finding ways to overcome the barriers that are currently suffocating government-to-government cooperation. These new forms of informal cooperation will exert pressure on policy makers to create new regulatory frameworks for strengthening the role of open-source platforms and communities.