Encrypted messaging applications (EMAs) such as WhatsApp and Telegram are growing in political significance but are even more difficult to research than more public sites like Facebook, Twitter and YouTube. This shift to platforms protected by end-to-end (E2E) encryption poses a major challenge for those hoping to understand and combat global disinformation operations, which our research shows have also migrated to these private spaces. Because EMAs offer less transparency with regard to message content, it may seem like the problem has been reduced. However, this clearly isn’t true. Moves like Meta’s decision to bring encryption to all of its platforms by 2023 risk sweeping society’s deep-set informational problems under the digital rug. Moreover, the ongoing war in Ukraine is underscoring the importance of EMAs as tools for both civilian activism and Russian disinformation operations.

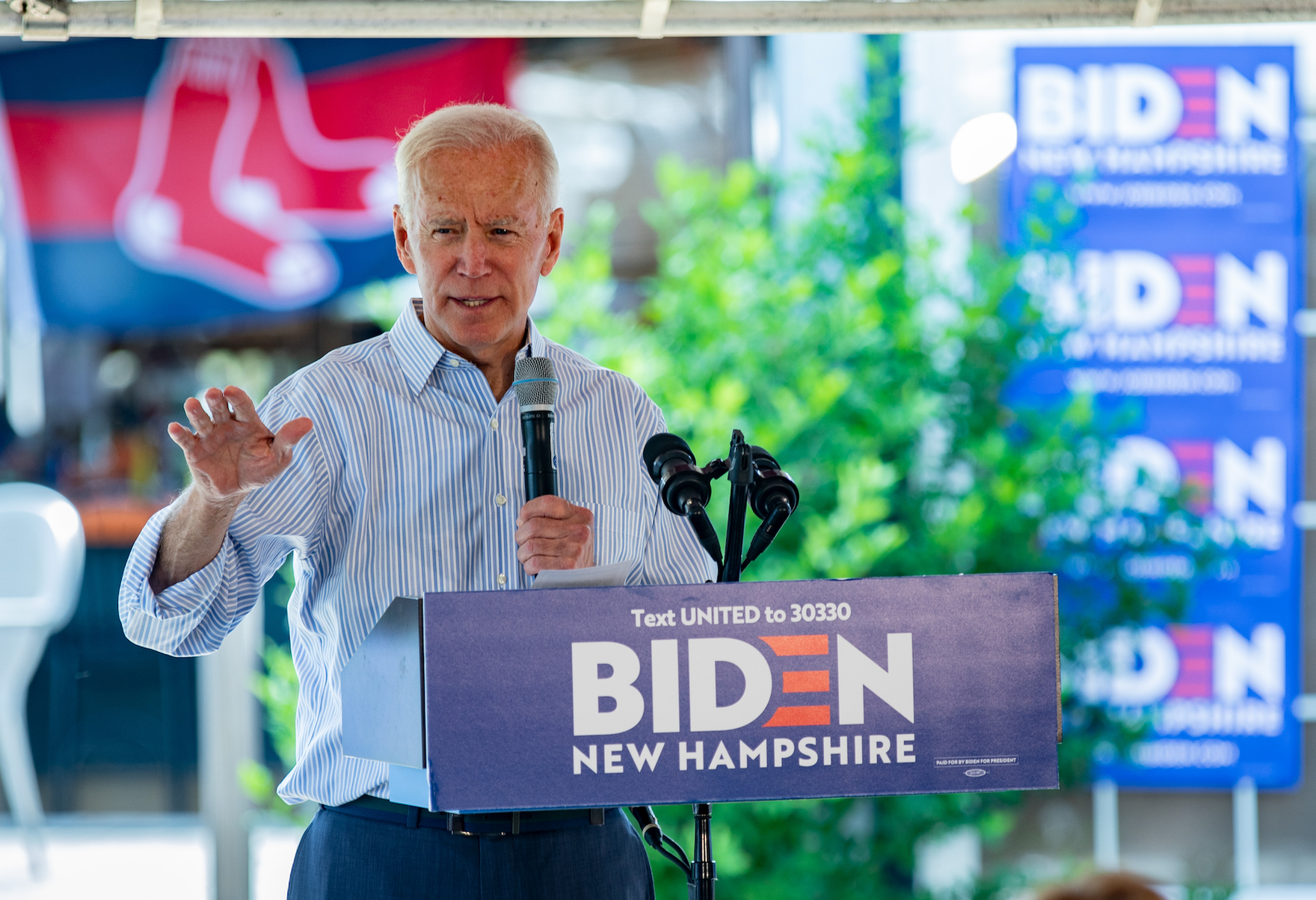

In 2020, then presidential candidate Joe Biden was added to the slew of politicians who had recently been denounced over Telegram and WhatsApp for alleged “anti-Catholic” views, despite Biden himself identifying as a lifelong adherent of that faith. Our team at the Propaganda Research Lab has been tracking these and similar allegations against Biden and other candidates for office across the political spectrum. Many such disinformation operations were specifically aimed at swaying the votes of people belonging to various US Hispanic communities, many of them devout Roman Catholics and most of them living in battleground states. Crucially, many of these groups use EMAs as a primary means of keeping in contact with friends and family and, as indicated above, conversing about politics.

Our work suggests that such claims are tied to several ongoing innovations in digital disinformation. Specifically, we have identified four main themes among disinformative electoral propaganda efforts over EMAs aimed at US diaspora communities: the sowing of confusion via translational ambiguities; the leveraging of falsehoods to redraw ideological fault lines; the use of religion to sow doubt about candidates’ views; and the oversimplification of complex perspectives, policies and procedures to alter voting decisions.

Overall, it is likely that the 2022 US mid-term elections will be seriously challenged by manipulative, false, political messaging over EMAs that leverages these themes to target diaspora communities and other segments of the country’s population. Computational propaganda is, simply put, increasingly flowing in private spaces and targeting the most vulnerable in our democracies — making it harder to track, understand and uncover, as well as potentially more damaging to civic engagement and equity. What is happening over EMAs in the United States, according to our research, also seems to be happening in large democracies like Brazil and India. In order to counter these global attempts to manipulate emigrant communities, we must act now.

While tech companies are still building their capacity to counter disinformation in languages other than English, propagandists have been refining multilingual, multicultural manipulation strategies in social media spaces for years. In addition to languages being inappropriately translated on occasion, both dis- and misinformation have proven borderless, as content is often created in one country with the intent to influence communities in another. In other instances, it is clear that while the messages might have originated or been produced in one country, their distribution tactics were borrowed from popular forms of sharing information in a given target community’s country of origin. For instance, distribution might rely on audio/voice and video messages in addition to text. The potential consequences of rising disinformation aimed at diaspora communities are deeply concerning. On an individual level, disinformation might illicitly impact a person’s vote or decision to engage politically. On a societal level, disinformation risks harming and alienating minority communities and, ultimately, further degrading democratic systems, which rely upon the votes of all people, and particularly those who have less of a voice in mainstream politics.

In order to be better prepared, we need short-, mid- and long-term responses. For the short term, the support of real-time fact-checking is vital. For what it’s worth, there is preliminary evidence that fact-checking and flagging content are more impactful on WhatsApp than on Facebook. Importantly, researchers have built a successful “WhatsApp Monitor” tool for electoral contests in Brazil and India. Tools like this are designed to work alongside communities who use them to detect viral false information content early on, and hence to facilitate fact-checking. They often include customizable push mechanisms that will publish the fact-checked result back to the groups where the false content was originally received.

Tools like this should be accompanied by bottom-up, community-centric programs, because the utilization of familiar relationships is particularly relevant in these more private, intimate spaces. Digital literacy programs with a specific focus on diaspora communities have achieved good results, empowering many participants through better understanding and practical ways to search for additional information. For example, in the Vietnamese American community, an organization called Pivot has its own website called VietFactCheck for dispelling false information. It works to equip community leaders with tools and resources and, ideally, preventive measures that aim to build societal resilience.

In addition, research indicates that interventions based on metadata, rather than on message content, show particular promise. This metadata includes information such as forwarding patterns and frequency. For example, the metadata-based forwarding limits that WhatsApp introduced seem to inhibit the spread of misinformation. However, it is important to evaluate these interventions independently in order to distill sensible approaches and best practices for use on other platforms. Independent evaluation is also necessary given concerns around WhatsApp’s own usage of the collected metadata.

In the mid term, we need to work harder to understand the significance of EMAs for minority communities, to create more inclusive democracies. Addressing this question is a consistent task for political systems, many of which are defined by histories of exclusion or marginalization, such as Canada and the United States. Journalists and policy makers should carry these insights into discussions about public trust and move away from top-down models that derive from the points of view of people in power. Legislative discussions about regulating the tech sector and content moderation should include representatives from minority groups so that their experiences and opinions inform these discussions.

The current crisis of public trust is also evident among other demographics in Canada and the United States. Populist rhetoric has found fertile soil in many communities. Singling out diaspora communities should not be viewed as an effort to “educate” some parts of the population. One could argue that diaspora communities are sometimes more vulnerable to populist rhetoric precisely because they demonstrate a higher degree of skepticism and a critical stance with regard to official news sources. In other words, marginalized communities are often well-placed to apply critical thinking when consuming the media. Any policy recommendation intended strictly to reassert the legitimacy of official news sources fails to address the challenge of trust and inclusion. In the long term, we need to design digital literacy initiatives that open an inclusive, truly interactive dialogue, so as to begin the process of (re)building this public trust.