This transcript was completed with the aid of computer voice recognition software. If you notice an error in this transcript, please let us know by contacting us here.

Beeban Kidron: Is your kid ready at six, at eight, at 10, to be shoved out the door with no instructions, and the oncoming traffic, and they go, "Oh, don't be so ridiculous." And I go, "Well, why in plain sight have we allowed a system so important, so central, so powerful, to do just that?"

Taylor Owen: Hi. I'm Taylor Owen, and this is Big Tech.

Like many of us, I spent a lot of time at home this year, which, as I'm sure everyone knows, can be tough, but one silver lining is that I've gotten to spend a lot of time with my son, and it has been pretty amazing to see him immersed in all these new hobbies, and the thing that he's totally hooked on right now is origami. So when he first expressed interest in this, we went out and bought him a couple of books, but he's only seven, so his origami skills are significantly better than his reading skills, which means that it required a lot of parental involvement. So we looked around for some more self-directed instruction and ended up on YouTube.

[CLIP]

Hello. I'm going to show you how to make a paper origami crane-

SOURCE: Origami Tsunami YouTube Channel https://youtu.be/KfnyopxdJXQ

“How To Make a Paper Crane: Origami Step by Step – Easy”

May 7, 2014

Taylor Owen: As with almost any other hobby you can think of, there are thousands and thousands of hours of origami tutorials on YouTube, and our son started devouring them.

[CLIP]

... this corner and fold it down-

SOURCE: Origami Tsunami YouTube Channel https://youtu.be/KfnyopxdJXQ

“How To Make a Paper Crane: Origami Step by Step – Easy”

May 7, 2014

Taylor Owen: We'd leave him in front of the computer, and he'd re-emerge an hour later holding an elaborately folded swan. And he started getting into magic tricks too. He'd memorize these really complicated sequences and practice them endlessly. It was honestly pretty amazing. And then one day, I went in to check on him and found him trying to hypnotize himself.

[CLIP]

Follow the pocket watch. You're feeling very tired.

SOURCE: Cara Institute of Advanced Hypnosis YouTube Channel https://youtu.be/waf2w7bB0dE

“Hypnotized to sleep by a pocket watch - sleep triggers by finger snaps”

September 9, 2012

Taylor Owen: The YouTube algorithm, which is infamous for its tendency to direct people to more and more extreme content, had taken him from a disappearing coin trick to a video on self-hypnotism. When I turned it off, he looked at me and said, "But dad, it will get me the best sleep of my life."

[CLIP]

Close your eyes and sleep.

SOURCE: Cara Institute of Advanced Hypnosis YouTube Channel https://youtu.be/waf2w7bB0dE

“Hypnotized to sleep by a pocket watch - sleep triggers by finger snaps”

September 9, 2012

Taylor Owen: The irony of all this is not lost on me. I, more than many, know that the internet is not always a great place for adults, let alone kids. At the same time, there's clearly some amazing content on there, so the question then becomes, how do we give our kids access to the good stuff while protecting them from the bad? It's a question that Baroness Beeban Kidron has been wrestling with for some time now. Beeban is a filmmaker, policymaker and activist. She's directed more than 20 movies, including Bridget Jones: The Edge of Reason. In 2013, she directed a documentary called InRealLife, where she followed around a group of teenagers as they dove deeper and deeper into the internet. It ended up being a pivotal moment in her career. After seeing the dangers of some of these digital technologies firsthand, Beeban has become a tireless advocate for better regulation around kids and tech. And she's now a member of the House of Lords and sits on their Democracy and Digital Technologies Committee.

Last year, she was instrumental in passing the UK's Age Appropriate Design Code, which lays out 15 standards for how platforms should treat kids. When a draft of the code was put forward, Beeban said, "Children and their parents have long been left with all the responsibility, but no control. Meanwhile, the tech sector, against all rationale, has been left with all the control, but no responsibility. The code will change this." Beeban has some fascinating ideas about how we can make the internet a safer place for kids and how, in doing that, we might actually make the internet safer for everyone. Here is Baroness Beeban Kidron.

Taylor Owen: All right. Do I call you Baroness?

Beeban Kidron: No, you call me Beeban.

Taylor Owen: Beeban Kidron, welcome to Big Tech.

Beeban Kidron: Glad to be with you.

Taylor Owen: So I recently watched a talk you gave eight years ago. That was before you got this heavily involved in the children and technology issue.

[CLIP]

Beeban Kidron: Evidence suggests that humans in all ages and from all cultures create their identity in some kind of narrative form-

SOURCE: TED YouTube Channel https://youtu.be/J-LQxBbQPTY

“Beeban Kidron: The shared wonder of film”

June 13, 2012

Taylor Owen: Where you were talking about the power of cinema. And you seem to be lamenting the fact that, while cinema was the last century's most influential art form, Hollywood was still caught in this moment of sensationalism and ahistoricism, and that that was affecting our kids, that they were being caught in this entertainment industry that wasn't providing what cinema could provide.

[CLIP]

Beeban Kidron: And what narrative, what history, what identity, what moral code are we imparting to our young?

SOURCE: TED YouTube Channel https://youtu.be/J-LQxBbQPTY

“Beeban Kidron: The shared wonder of film”

June 13, 2012

Taylor Owen: And I'm wondering with all the changes in technology since, and the way we consume and produce media, whether you still think that's the case.

Beeban Kidron: So it's a really interesting question, and I think that in that particular talk, I was lamenting something that was about a collective experience, and so the way that we consume content and narrative has become very individual, has become an island, and it's much, much harder to have a collective experience, and I think there's a loss there, and I think that perhaps some of the mesmeric qualities and intellectual ambition-

Taylor Owen: What do you mean by mesmeric?

Beeban Kidron: So when you go into a cinema, there's a moment, isn't there? There's the anticipation that the lights go out, the ads come on, the names fill the screen, the music... And you go down into a sort of a dream state and a sort of a... I don't know how to say it, but a giving over to this experience for the duration. That was something that I saw play out on hundreds and hundreds of children as an incredibly positive force. And many of them said to me, because I ran this huge film club, which had tens of thousands of kids in it. So I had a lot of experience of talking directly to them. And a lot of them said to me, "That was quiet time, Miss. I haven't been that still for a very long time. They move me around the classroom at school. They move me around my life. I am nudged and pushed." And that experience was overwhelming in a positive sense. So I think that's what I was talking about, and it does absolutely feed into bite-size, rapid, nudge technology and so on. But I wouldn't want to lament the idea that there was only one way, and then this is 20th century storytelling in the 21st century. I think there's something much more profound about the problem of digital technologies, and there's something rather marvelous about that, but they're not in absolute direct opposition to each other.

Taylor Owen: Yeah. I mean, I guess, even though we're in a moment where long-form storytelling in film is having a bit of a resurgence with the streaming platforms and the multi-season dramas and all of that, that's not collective, so that's still not getting at this aspect that we're not necessarily experiencing that together.

Beeban Kidron: No.

Taylor Owen: All right. So moving to this topic of children and digital technology, your first step into that, actually soon after that talk that I watched, was the documentary, InRealLife.

[CLIP]

Male Youth: I text them a lot. We tweet each other and we talk on Google Talk a lot.

Beeban Kidron: And have you had a boyfriend in real life?

Male Youth: No.

SOURCE: InRealLife YouTube Channel https://youtu.be/71pXYIwYpaU

“InRealLife Extended Trailer”

August 15, 2013

Taylor Owen: And I watched it again recently and was struck by a lot of... It is almost a time capsule, which was remarkable, both the people you interview and the way you talk about the technologies, and it really was capturing a moment. But I'm wondering why you felt it was so important to feature that age group, that time, using those kinds of technologies, and what brought you to that... the urgency of it, I guess.

Beeban Kidron: I've already said, I was at the time... I had set up this charity, it was called Film Club, it's now called Into Film, and I was going in and out of schools and in and out of the lives of children, and around 2011, 2012, I noticed something different about that community. And it was a funny thing. It was a silence and a restlessness. And it was that move, actually from that chatter that you associate with young teenagers, into actually something that was the bright light and the poking of the phone, and it was the time when a smartphone became a price point that either a parent would buy it for a teenager or the parent would upgrade and give their old phone to the child, and it felt like overnight. Now, when I first started thinking about that, I was really much more interested as a filmmaker in the concept of what it was like to be both here and there, and then what was there? Did we understand? And people often asked me, was it about my own children? It wasn't. My children are a little bit older, adult now. It was not anxious mother stuff, it was more philosophical. It was, "What does it mean to be a child and always have another there and there?" That was my question. That was the essay question. It wasn't until I had spent what I would probably have to admit was several hundred hours in the bedrooms of teenagers that I actually-

Taylor Owen: Which is a terrifying prospect, as you see in that movie.

Beeban Kidron: An absolutely terrifying prospect. It wasn't until the middle of that, that I realized that there was something else. And the something else that first bothered me was that absolute lack of understanding that this was a hardwired, privately-owned, constantly saved, upgraded, designed world that had a purpose. Yeah?

Taylor Owen: Yeah. And those are truisms now, right? I mean, we know those things now, but then that wasn't the debate, was it?

Beeban Kidron: It wasn't the debate. It wasn't understood, and as you will remember from the film, the thing that got a bit obsessive was me asking people where's the cloud, and they started pointing up at the sky, and only very few of them were doing it in any kind of ironic sense. I mean, they literally didn't know. And it's interesting that in the aftermath of making the film, I made this bizarre promise that any teacher who asked me, I would go into their school and talk to the kids about what I had seen and what I had done and so on, and I used to show that clip of a Facebook server farm, which is the endless, endless, endless, endless shot of blue blinking lights, and all the servers. And when I talked about that with the kids, it was like a chill going through them and they go, "But Miss, what would happen if they drop a bomb on it?" And I would go... And it was surprising how often they actually used that exact thing, exact words. Sometimes they meant if someone destroyed it, set it on fire and stuff, but mainly they said, drop a bomb on it.

Taylor Owen: Which is about as physical a thing as you could imagine, right? They're getting at the tangible aspect of it.

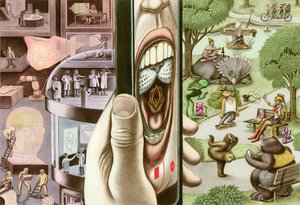

Beeban Kidron: They're getting at the tangible exactly right. And as they said it, I go, "Oh, don't worry. They got it in 10 other places and 100 other places. They copied it." And you could see in the room that energy dissipate and emotionally those children understood what it meant to be fragmented, distributed, and not in control of what was, and what they understood as their intimacies, their actions, their mistakes, their pleasures, their loves, things that were precious to them. So there's the kids in the bedroom, but that second piece of how the infrastructure was hidden was really really important. And then of course you have the third part of it which is me crisscrossing America, asking all the experts, and they're going, "Don't worry. It's a democratizing technology." And many of them have had to face up to how long they asserted and were blind to... and I would probably say willfully blind to a societal problem at scale. Yeah. And you watch them fall. Like the bottles in the song, they fell off the wall, one by one, by one, and finally had to admit, "Do you know what? You cannot pursue this framing of, all users must be equal. It's a democratizing thing. There will be no gatekeepers." Each one of those things is fundamentally flawed, and it's that all users will be equal that actually changed my life, because it was in a moment, and it's in my interview with Nick Negroponte, when we're in New York, and he said this, "All users will be equal as a good." Yeah. And he meant it, and it sounds good. But actually I suddenly had this thing, which is, if all users are equal, then de facto children are being treated as adults. And that is a problem. And it was a bit like the movies. I'm sitting there in New York and I go, rewind, through my brain, all these hundreds of hours I spent in kids' bedrooms. And I realize, bottom line is most of the problems kids have online are a direct result of the fact that the demands of them are adult. 
They are assumed to have the maturity and the capacity of adults, and that doesn't matter whether it's nudge technology, or adult content, or misinformation, disinformation, you name it. In absolutely every way we are demanding that they are adult at ever earlier ages.

Taylor Owen: Yeah. I mean hearing that in retrospect makes perfect sense. So I was going to ask you what that transition point was from making the film to becoming, I mean, an activist and a policy maker, which is a different world, there's no question. But that makes perfect sense, right? That the flattening of the internet, treating everybody as equal, being layered onto a society where we actually don't treat everybody as equal. That's the whole point of living in a society with rules and norms and laws, is that we treat different groups of people in different ways for a good reason, that have evolved over centuries, right? Children are different than adults. And if the internet treats everybody the same, then that is harming something at some point, right?

Beeban Kidron: Yeah. And actually, even if we take the harm language out, because I... and there are absolute harms and I'm absolutely prepared to talk about that, but actually it's a violation of rights. It's an acceleration of risk. It's a diminution of the journey to maturity. It's against our culture. If a kid in our environment... If there's something unpleasant, what do we do? We put our hand over their eyes.

Taylor Owen: Yeah.

Beeban Kidron: Yeah. If they don't understand, we explain it differently. Yeah. If they're not ready yet we say, "Hey guys, next year, you can do this." I mean, we are not talking about things that we don't know about. I think that the forces that exist have done their absolute best to sow confusion about what's at stake here, but actually the bizarre thing is how many people say, "I don't know about technology." And I turn around and go, "Yeah. But what do you know about the journey from zero to 18? Is your kid ready at six, at eight, at 10, to be shoved out the door with no instructions, and the oncoming traffic?" And they go, "Oh, don't be so ridiculous." And I go, "Well, why in plain sight, have we allowed a system so important, so central, so powerful, to do just that?"

Taylor Owen: Yeah. You mentioned going to speak to classes and I do that a fair amount as well, and about this topic, to teachers and to students, and I am constantly blown away by the concern of teachers, in particular, and I was a high school teacher. Because they are on the front lines of bringing kids through that transition in life, right? And they know how kids think and function, and what they need to learn at different phases. And they know that's being...

Beeban Kidron: Eroded.

Taylor Owen: ... eroded, or circumvented, or whatever it is, by the way they're consuming information. And there's a disconnect between how they need to evolve as humans and how they're engaging in the digital world.

Beeban Kidron: So it's really interesting because I referred already to the fact that I made this crazy promise to go to any school that invited me. The reason I made that promise is because after I made the film, every time the lights went out... and it didn't actually matter whether I was in Rio de Janeiro, Madrid, Manchester, London, New York, it didn't matter, whenever the lights went up, the room was full of teachers. And the reason that the room was full of teachers I realized, is because a parent has one or two or three kids, and they make excuses, and they've got a narrative that it's okay, but Billy is a bit upset, but Sophie isn't, or whatever their thing is. Teachers see 30 kids, and then another 30 kids, and another 30 kids throughout the day, and they were like an early warning system. They were the canaries in the coal mine. They knew that there was something seismic happening and they didn't know what to do about it. And that's how that moment came, and I did literally go to hundreds of schools over a period of 18 months in many countries, sometimes being translated, and it was a life-changing experience. And as you've already pointed out, I actually stopped making films, and I had my own kind of, as my father used to call it, a pain of consciousness. Whether or not you really want to understand, you have seen something that you cannot go back on, you cannot live with. And I feel that this is a profound social injustice at a generational level to at least one in three people in the digital world, right? This is not a minor matter. So for a billion people, this is a problem.

Taylor Owen: Right. Let's talk about that problem a little bit. One of the things that really strikes me about it is that the scope of harms people are talking about potentially, are so vast, right? So clustering something like childhood brain development and mental health, along with exposure to different kinds of content, and bullying online, and general social pressures that emerge in the system, those are all very different things. And I'm wondering how you parse that. How do you get at what the root of the harm is, and what we should really be going after here?

Beeban Kidron: So that's a great question. And just to be irritating, I say, we don't go after the harm, we go after the risk, right? So you start at the other end of the tube, and it took some time to work this out. I absolutely agree with you that if you lay down all the potential harms in front of you, you have, on the one hand, childhood, yeah, you have, on the other hand, being a human being in the 21st century, and on the third hand, you have some really egregious attacks on children's rights, liberties and so on. So how do you put that all together? And what I try and look at, with my colleagues at 5Rights and my colleagues in the House of Lords, is really about why we allow the level of risk in this system, in a way that we actually ask people to mitigate in all other systems. And so, what I mean is, if you are a drug manufacturer, first of all, you have to go through a process, and then you've also got to look and see that actually someone who is under the age of 12, who's got a different body weight, might have a smaller dose, or indeed a different medicine. And I could play that game, ad infinitum, in all these different arenas, we'll actually go, "What are we trying to achieve? Who is in the room? How do we make sure that what we're trying to achieve is suitable for the person in the room?" That is, a risk analysis that goes on.

Taylor Owen: And we do that all the time in all sorts of different things.

Beeban Kidron: All the time. And we do it legally, we do it in regulation, and we do it culturally.

Taylor Owen: It's so funny you say that. Just this morning, there was a story in Canada about how our federal product safety regulator has forced a recall on Ring door cameras, the ones that take all the video, but because they catch on fire sometimes, not because they're collecting this mass amount of data about society and being used by police departments to surveil us, right? So we know how to do this, we just don't do it for these things, right?

Beeban Kidron: Precisely. And so, one of the things I often say is, not all kids come to harm from all risks, but you know what? If we were sending washing machines and one in every 300 burst into fire, we would have recalls. So why is it that it is okay to have a poisonous system for kids in this arena? And the answer is, of course, it's not, and the other answer is, this is not a mature industry, and it is maturing, and we better get to it quick. What is unusual is how pervasive it is across so many things, and how powerful we have let it become, and how little we have understood about how it is affecting children and childhood. But to be absolutely really precise in answering your question, actually a lot of the work that we're currently doing, whether it's work on creating a standard for an age-appropriate framework for terms and conditions, whether it is a child-focused data risk assessment, which we're doing with the regulator here, actually the very first starting point is going, "What is your service? What are you processing? What are the potential outcomes? And does it affect these things?" And some of the things that you might consider in that... and obviously we've got a long list that is turning into hopefully a new normal of the questions you'd ask. But you had a very long list earlier in your question, didn't you? And so you go, "Will it affect their emotional development? Will it affect their sleep? Will it make them vulnerable to someone seeing their real-time location? Will it introduce a stranger adult to an underage person?" Et cetera. I mean, you can keep on going, and once you start asking those questions, you go, "Well, that's not very appropriate. Is it? How about we take out that particular risk?" And one of the most misunderstood things in this arena is that actually many of the things that we need to do, to give kids a more equitable shout in this arena, is actually switch things off. 
It's a lot less about new technology than it is actually about taking them out of the business model here and there. Don't target them with ads. Don't offer friendship suggestions. Don't network them in certain ways. It's actually a lot less difficult to get some of these things, and to be absolutely clear, I am never going to say that it should be 100% risk free. It never will be. It never could be, but actually if you take out the industrial level risk, which is, let's face it, a harm unrealized, yeah? And leave us only with the realized harms, once that risk has been dealt with in a reasonable and proportionate manner, then we have a fighting chance to deal with some of those harms because it wouldn't be the list that you gave at the beginning-

Taylor Owen: No. That's right. And I want to talk about that sort of policy levers, I guess for lack of a better term, in a second, but one last thing on this, I mean, so I have a seven-year-old son and I write and complain a lot about social media platforms and all the things you talk about and care about too, and one of the things he likes better than anything is these hour-long origami making videos on YouTube. I mean, they're incredible. He spends an hour watching these things and duplicating them, and it's truly remarkable to watch, the brains working in that creative way and follow these instructions and all of that, but I keep coming back to why on earth should I have to embrace and use the entire problematic infrastructure and level of risk, as you say, of the YouTube ecosystem in order to get that benefit? And I just wonder how we've gotten to a place where we're willing to take all of those chances and expose ourselves and our kids to all this vulnerability for that benefit. The trade-off just seems bizarre, doesn't it?

Beeban Kidron: I think it is, and I mean, I think it's not entirely separate from other decisions we have made at a societal level to valorize efficiency, to exploit tiny margins of production benefit, to not worry about the societal impacts of our supply lines and our behaviours, either on people or planet. So I think we've got into a god of efficiency, a god of growth, a god of profit and in the wake of all of those things, we've accepted some sort of infrastructural and business practice, which is not in everybody's best interest. I mean, it just simply isn't. And I only talk about kids in this policy area, but I think you don't have to get very far down the road as an Uber driver to work it out also. So-

Taylor Owen: We made some trade offs here, right?

Beeban Kidron: So I think that talking at that level, that is, that's the fight. What you have described is the fight. It should not be that the entire attention, future, potential, educational outcomes that position in the... you name it, is at stake for watching a video when they're seven. And obviously that's a bit of an exaggeration, but why do we have to have all of that infrastructure on the shoulders of a child? And I think that we've got to change that. And the companies have either got to do it because we force them, or we've got to be prepared to pay a little bit more for some content for children, or we've got to actually look at our values more profoundly and say, "Actually, this is out of kilter. This is out of balance. And we need to start over at least with regard to this demographic."

Taylor Owen: Yeah. And so you've been working at figuring out how to start this over or hit reset here a bit, and you're actually in the policymaking apparatus now, formally, right? I mean, you sit in the House of Lords, you can propose policies. How have you seen that government perspective on this change over the... since you've been there? I mean, it's five or six years now and the British government, with all of their online harms work, feels like they're stepping into this space in a serious way. How did that change come about? What do you think?

Beeban Kidron: Yeah, I mean, that's a huge question. And if I say... Let me answer it in a couple of different ways. So the first thing is, to think at systemic level and go, "Okay, let's at first prove that childhood is everywhere and that the digital isn't exempt." And that's been a very long journey, but it's so-

Taylor Owen: And that's like a first principle issue, right? Because if you don't have that, you can't do anything on top of that differentiation.

Beeban Kidron: Exactly right. And that was the basis upon which I do a lot of my... when you say levers, is to look at where the levers are and you look, 196 countries do embody children's rights, do report to the council, do dah-dah-dah, and at least now they're going to have to report on the digital world, and we have set out some pretty tough things or... So that's the first thing. Then, that put me in mind... all of your listeners will be aware of GDPR and the data protection regulation, but when it came through our House... and I looked at it and it said, "Yeah, kids need special consideration." And then you look for the other bit and you go, "Oh."

Taylor Owen: In what way? Yeah.

Beeban Kidron: "In what way?" Yeah. And also there's the whole debate about 13 and 16, and we've created a situation where, in the rest of the world, a child is someone under 18 and in the digital world suddenly you're supposed to be an adult at 13.

Taylor Owen: Can you explain that before we move on. I mean, that always baffles me. Is that just because the companies have set within their terms of service agreements that age, totally arbitrary?

Beeban Kidron: It's actually to do with the American law COPPA, which is a piece of consumer law that was actually researched last century, came in 2000, and was before any of the services that kids are currently using, and it's completely out of date, and it's about advertising, and has been exported as a norm around the world, and it's very, very problematic in a number of ways, including that it's very poorly adhered to and it also relies on the concept of consent. And I am sure that we could spend an entire hour on the concept of consent in the digital world, which we won't, but... So in every way, not fit for purpose, yeah. Hopefully a Biden government will be tackling this in short order, but it has meant that de facto adult begins at 13 in the digital world. So all of these sort of very ludicrous things, and I'm looking at the legislation and I go, "Okay, let me put together this idea that they need special consideration with the ideas that they have rights and needs," yeah? "With the idea that they are, in fact, a child until they're 18," and then with a very distinctive and important idea, which is, we must stop talking about the services that are directed at children because children spend most of their time in services that are not directed at them, or indeed they may not actively be using. So when you have your discussions about facial recognition, about biometric data, about misinformation, disinformation, all of these things are affecting children, and yet every piece of policy is about YouTube Kids.

Taylor Owen: Right. These limited niche products that are not really widely used. Yeah.

Beeban Kidron: No. And to be honest, some of them are good, some of them are bad, but that's not where the problem lies. Yeah?

Taylor Owen: Sure. That's not the point, right? Yeah.

Beeban Kidron: It's not the point. So putting all of that together, I introduced an amendment into the bill that has now found the light of day in law, and it was at one point called the Age Appropriate Design Code, it's now called the Children's Code. It's the first of its kind, and it basically sets out what it means to give children special consideration and a high level of protection, and it won't be lost on you that because it is data law, it actually gets into the system. Because if that's where the action is, if that's where the money is, if that's where we're... follow the data as it were, that is where the solution is. So that was a very long way of... Even before you get to the harms piece of actually talking about levers, you talk about first principles and rights, you talk about following the money in the data law, and I am very hopeful that the Kids Code will actually, like GDPR, spread around the world, and I know for a fact that there are several jurisdictions where it is currently under consideration and will be adopted in short order.

Taylor Owen: I highly suspect Canada will be one of those at some point. I can't imagine it not being used as a template.

Beeban Kidron: I stand ready to help anyone in Canada who wishes to take that action.

Taylor Owen: Be careful. It'll be like your going-to-school offer. You may be on the tour of national governments, globally, so.

Beeban Kidron: All right. Happily so, because I think it's very profound because it deals with profiling, it deals with nudge techniques, it deals with terms and conditions. In the end, the minute that you start talking about this stuff, it starts being very quick to the same set of solutions.

Taylor Owen: Well, and same set of solutions across age groups too, frankly. I mean, that list of 15 changes in the code, or requirements in the code, are certainly all things I would like to have on my behaviour too, right? So it could be, this starts to normalize some of these things beyond just kids.

Beeban Kidron: I really appreciate that point. I mean, I make a special case for kids and I feel we have a higher bar of duty, and they require a higher bar of representation because they can't represent themselves in this, but I would have to say, on the record, as they say, where I come from, on the record, that I'd be very happy to see that mirrored, and I very often think when I'm fighting for kids, that there's some of the things that I'm fighting for, that I would like as a citizen.

Taylor Owen: Yeah. Is there any risk in that? Is there any risk of focusing just on kids and then missing this broader structural surveillance capitalism argument? Or is it just starting there and then moving on?

Beeban Kidron: I think there's two things. One is, it's harder to resist for politicians and for the sector and so on. I think, secondly, we have more levers around kids. I think that actually there's something else, which is, as much as I hate to admit it, this stuff takes time. And so the eight-year-olds, the kids who were eight, when I started, are 16, yeah. Very shortly, they'll be 18 and they'll be adults. And I think there's two parts of it. One is we're going to win with kids, and then those kids as adults, who have been treated better, are going to demand more. And I think that what you probably know from the sort of fantastically interesting conversations you've had across the piece, is one of the biggest battles has been to get civil society and politicians to care, to care enough. And what they do care about is the kids, and I see the work that I do as a twofold thing. One is, get the lived experience of children to be a whole lot better than it is. But in doing that, you are educating politicians, you are educating parents, you are educating children, and you are educating the tech companies to a degree about what it is we are asking for, what it is, indeed, we are demanding. And I think that that can only grow to be a more citizen-based thing. But I think if you try and boil the ocean, we'd get nowhere.

Taylor Owen: Closing in here on the end... but this conversation ends up zeroing in on these tech companies, I think rightfully so, like you said. They've built the infrastructure. They benefit from the infrastructure. But the flip side of the failure, and I think some of the responsibility here, seems to be the ease with which our institutions have adopted those technologies. And I think the education system is a case in point, right? When COVID hits and we enter into pandemic, what do we do? We all jump on the platforms and start using them as teaching tools. And there's case, after case, after case of that being the issue. The healthcare system is the same. And so, I guess, yes, we need to put pressure on platforms and the technologies themselves, but do you see that broader shift is necessary too? We just start really thinking more democratically and deliberatively about what tools we use, and when, and why, and who builds them.

Beeban Kidron: I mean, 100%. And I've been actually having a battle since the beginning of lockdown here in the UK, because we've got this astonishing thing where the kids get sent home, and the whole focus is towards mandating schools to do online learning. And I'm sitting there as a virtually lone voice because even many of the people who campaign with me in different areas were, "Don't talk about safeguarding now. Don't talk about safeguarding." And then I get a tsunami of teachers saying, "This is what happened in my Google Class yesterday. This is what happened in my Microsoft Team." We are in a situation, yeah? Where school for a child is no longer safe, school for a teacher is no longer safe. And I have to say the first person who I saw in real life during the pandemic was a teacher who I went to visit, who had had such horrific things happening in her school that I went to help her, but she turned around to me and she said, "I dare not set homework because I do not want to be the person to send those kids online into a world that is so unsafe and so unsuitable. And so now I'm failing my kids' education." Now that is a sort of a manifestation of what you're saying. And absolutely, at a societal level, here in the UK, I think we're about to see a horrible attempt to sell health data through the NHS, to the big companies. I think we will see that, and whether that's a battle we can win. I don't want to be dystopian about this because I'm fundamentally an optimist. I think this is all about uses and abuses. This is marvellous technology. It does wonderful things. Look at us speaking here. This is an absolutely incredible thing, but we have to have limits and we have valorized efficiency and theoretically low price over people, democracy, planet, and our kids' future. And that is not a good deal. Yeah?
And the handful of people who are winning out of that deal are winning and winning, and even during the pandemic, they got a whole lot richer, and the people who are losing, are losing and losing. And it is as broad an issue as we choose to define it. I mean, there is virtually no edge to it. And that's why I actually think we need regulation, we need standards, we need consistency, that we need to decide what kind of communities we want to live in, and we've got to say, "Do you know what? No growth at any cost." The god of growth must be killed.

Taylor Owen: Yeah. And we can manage. I agree with all of that, and it strikes me that you started this within real life, looking at the problem of a device in a kid's hand, and now we're talking about the nature of global capitalism, the future of democracy, right? This is expanded into a much broader challenge and agenda, and I wonder if you see that, that's the direction of it, I guess?

Beeban Kidron: You know what? It is, but the way that I choose to frame it, and the way that I think is important to frame it, is, it's their future democracy, and they are the forgotten. Yeah? People think that we think about this, but they don't. And all of these debates are centred... It's either what we're giving them to inherit. Yeah? Or it's what we're failing to do right now. But they are the forgotten demographic, and I don't have to look further than children to be in the centre of all these debates because they are endlessly, endlessly forgotten in the digital world.

Taylor Owen: That was my conversation with Baroness Beeban Kidron.

Big Tech is presented by the Centre for International Governance Innovation and produced by Antica Productions. Please consider subscribing on Apple Podcasts, Spotify, or wherever you get your podcasts. We release new episodes on Thursdays every other week.