The application of artificial intelligence (AI) to warfare is advancing quickly. As recent conflicts in Ukraine, Azerbaijan, Syria and Ethiopia demonstrate, autonomous and semi-autonomous drones are becoming a cheap and easy tool for attacking conventional targets. Drone swarms overwhelming Canadian military installations, alongside cyberattacks on critical infrastructure, are an increasingly likely prospect.

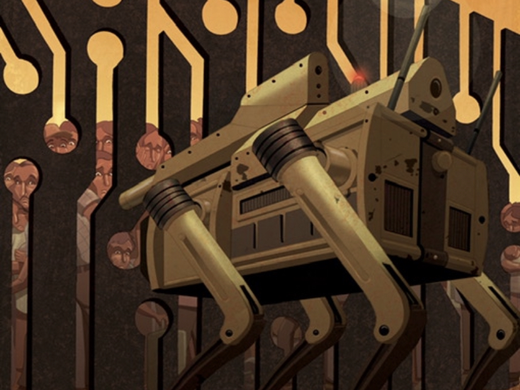

Beyond the exaggerations often seen in science fiction, it is important to understand the inherent dangers in the application of AI to the battlefield. Together, the proliferation of lethal autonomous weapons systems (LAWS), in the form of military drones and robotics, and the rise of China as a major world power have begun reshaping the global order. Active engagement by Canada and other North Atlantic Treaty Organization (NATO) member countries in discussions on regulating military AI could be key to managing this changing geopolitical landscape.

What is clear is that we are living through a period of transition between two epochs: an industrial era characterized by the dominance of the United States, and a new digital era characterized by the rise of a multipolar global order. Since the end of the Second World War, the global order has been held together by a web of conventions, treaties, patents and contracts grounded in international law. This “rules-based order” appears to be winding down.

Determining the guardrails in the development of military AI is critical to managing this new reality. Like the steam engine and the internal combustion engine, AI is a general-purpose technology with a capacity to alter the pace and scale of war. Unfortunately, the laws of war regulating the use of AI — in terms of both the conditions for initiating wars (jus ad bellum) and the conduct of AI in war (jus in bello) — have yet to be defined.

The need to regulate AI as a weapon of war is obvious: as military drones and robotics become cheaper and more widespread, they will give a range of state and non-state actors access to LAWS. In fact, many states are already well advanced in deploying LAWS. Although Canada and a significant number of other countries support legally binding treaties that would ban the development and use of autonomous weapons, most major military powers see significant value in weaponizing AI.

For countries such as China, Russia and the United States, a lack of mutual trust remains a substantial hurdle in pursuing collective arms-control agreements. Nonetheless, the dangers associated with the unchecked proliferation of military AI are clear. Indeed, the very algorithms now driving mundane industries such as children’s toys, social media, music streaming and autonomous driving networks are also underwriting the evolution of LAWS.

Unfortunately, AI remains a moving target. Unlike nuclear weapons or genetically modified pathogens, which depend on specialized materials and facilities that can be monitored, AI is essentially software. A “killer robot,” for example, is not the outcome of a specific type of innovation. Rather, it is more akin to a collection of technologies knitted together by ever-advancing software algorithms.

Fortunately, this is not the first time the world’s nation-states have been confronted with new technologies impacting global security. Despite the many divergent views on AI and its weaponization, past negotiations on weapons of mass destruction can serve as a basis for future treaties — particularly in defining the rules of war. As NATO’s Advisory Group on Emerging and Disruptive Technologies observes, Canada and its allies should seek to promote and establish multilateral dialogue on a comprehensive architecture for the development and governance of AI.

Throughout the Cold War, confidence-building measures that included regular dialogue, scientific cooperation and shared scholarship were critical to managing geopolitical tensions. In the aftermath of the Second World War, the world’s most powerful countries — the United States, Britain, the Soviet Union, China, France, Germany and Japan — oversaw global governance on nuclear weapons, chemical agents and biological warfare.

Now, as then, the world must act collectively to govern a new generation of highly advanced weapons of mass destruction.