The COVID-19 pandemic has changed the contours of the credible. Three months ago, who would have believed that half the planet would be on lockdown? Or that some countries, like the United States, would be seeing the worst unemployment since the Great Depression? Or that the people of Czechia (and, later, others) would make enough masks for all their fellow citizens in mere days to comply with a mandatory government order to wear masks in public? What once seemed impossible has, in many cases, proven possible.

The list of dramatic, unexpected shifts in our online behaviour seems endless. Within five days, from February 20 to 25, the top ten search terms on Amazon switched from the usual suspects like “phone cases” to coronavirus-related items in countries such as Italy, the United Kingdom, the United States and Germany. Bricks-and-mortar shops have pivoted to online sales, Canadian provinces have legalized the sale of alcohol online, and in early May, the e-commerce platform Shopify became the most valuable publicly traded company in Canada.

Social media platforms have been sources of surprise as well. In recent weeks, digital platforms have shared more data for research, taken extensive responsibility for content and moved quickly to adopt official institutions such as the World Health Organization as the trusted sources for information. These swift developments remind us to be skeptical of company rhetoric and ambitious in our visions of what a positive internet could be. This pandemic is revealing what is feasible.

These companies have long been known for their strenuous defence of freedom of expression. Although they employ content moderators and have extensive policies, they have typically been slow to combat false information, even forms with demonstrable harms, such as anti-vaccination content.

Their reactions were far faster with COVID-19. Companies rapidly updated their content moderation policies and seemed to understand that they bore some responsibility for content. “Even in the most free expression friendly traditions, like the United States, there’s a precedent that you don’t allow people to yell fire in a crowded room,” said Mark Zuckerberg, Facebook’s CEO, in mid-March. Instagram started to delete false content that used the COVID-19 hashtag and substituted information from the World Health Organization (WHO) or US Centers for Disease Control and Prevention (CDC). Various types of advertisements were curtailed to prevent price gouging and scamming. In late March, Twitter even decided that prominent politicians’ tweets could be removed, and deleted two tweets by Jair Bolsonaro, the Brazilian president, for praising false cures and spreading incorrect information.

After long implying that the best information would drift to the top, platforms decided that they had to choose the most reliable sources for their users. By late March, anyone logging into Twitter would see buttons providing information on the COVID-19 virus from the WHO and the CDC’s equivalent in their own country. Results for Google searches related to the disease now prominently display authoritative information about symptoms and the number of cases from Health Canada and answers to common questions and tips on prevention from the WHO.

By late April, YouTube was using international health institutions as the basis for its content moderation policies around COVID-19. YouTube decided to delete any content providing “medically unsubstantiated” advice around the virus, meaning anything contradicting WHO guidelines. Within days, YouTube had removed a channel by David Icke, who was falsely linking the outbreak to 5G technology. This raises the question of why platforms had not previously moderated similar unsubstantiated claims related to other diseases, such as cancer, measles, and malaria.

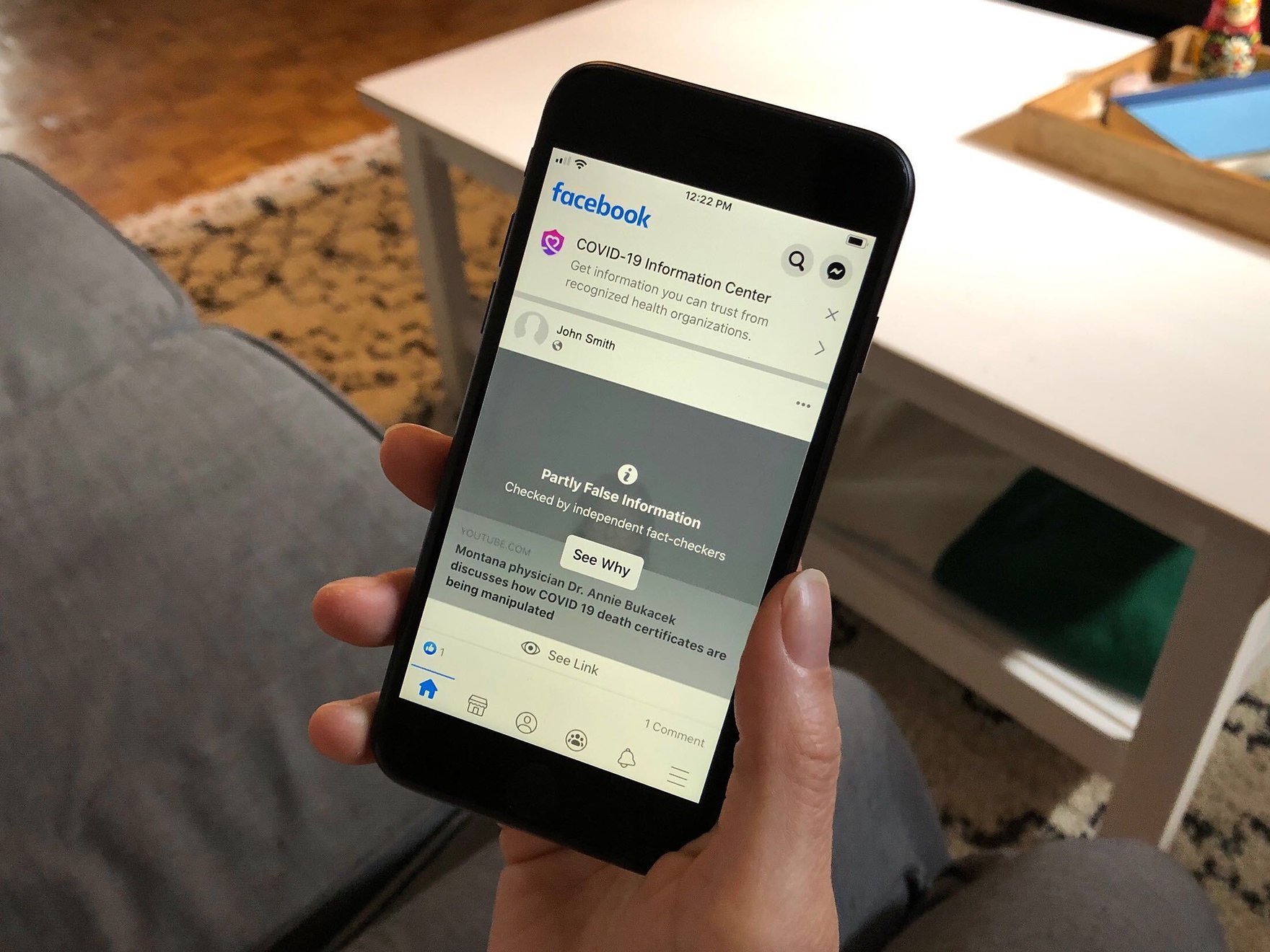

Companies have also altered their policies on correcting false information, as it became clear that conspiracy theories online were having direct offline consequences. UK mobile phone network providers found themselves battling the destruction of 5G towers after online conspiracies linked the towers to COVID-19. On May 6, Twitter announced that anyone tweeting about those theories would be directed toward UK government information about 5G. On May 11, Twitter followed what Facebook had done in mid-March and announced a labelling scheme for tweets making “misleading,” “disputed,” or “unverified” claims about the coronavirus. Some activists had long called for social media companies to provide corrections like this and, indeed, to go further by notifying everyone who saw the post. Companies have now met these demands part-way by acting on the harms of coronavirus-related health disinformation. If these interventions prove popular and successful, might these measures be expanded to encompass all health information or, even, to include other categories?

Companies have also shared some data surprisingly quickly, given their previous reluctance to involve researchers and the 20-month process it took to provide Facebook data to vetted researchers.

Facebook and Google are working on a project with researchers at Carnegie Mellon to track COVID-19 symptoms. Google is also providing community mobility reports based on its tracking of its users “for a limited time, so long as public health officials find them useful in their work to stop the spread of COVID-19.” These efforts are welcome: theoretically, we need all the help we can get to combat this disease. Yet why can companies share data more quickly now than before? And why should companies be allowed to decide when they start and stop providing public data?

In the last few months, social media platforms have moved far more quickly than ever before to enact policies that their employees and executives believe will save lives. This seems the moment to begin a new type of conversation about how platforms might design more permanent content moderation policies around certain types of information: specifically, information that can mean the difference between life and death. What fundamentals need to change at a time when anti-vaccination content might mean that a coronavirus vaccination can never succeed, even if we are fortunate enough to develop one swiftly?

Indeed, many of the underlying problems of platform governance remain. Twitter’s new policy on the 5G conspiracy theory came too late to stop it spreading to Canada, where arsonists have attacked several cellphone towers. Doctors in many countries around the world have signed a petition calling for companies to do more to combat dangerous disinformation. The Reuters Institute for the Study of Journalism examined 225 pieces of misinformation around COVID-19. Eighty-eight percent of these pieces of misinformation were spread on social media, while only nine percent appeared on television and seven percent on other websites. Just last week, a conspiracy video that cast the coronavirus as a “plandemic” spread rapidly on YouTube, partly through coordinated linking by QAnon groups (a far-right conspiracy theory community) on Facebook. Reform on one platform alone cannot solve these problems.

What the crisis has already shown is that companies are willing to act. And, contrary to what they previously sometimes insinuated, they can act quickly and broadly. This laudable willingness to address the pandemic is evidence that companies can do better, and that we are within our rights to demand it.