The commercial landscape is experiencing seismic shifts from the rapid evolution and proliferation of large-scale language models and generative artificial intelligence (AI). These new technologies are a veritable iceberg, with much of their potential and capabilities still unknown. Unresolved questions, including ethical ones, abound. Yet it is in open-source intelligence analysis that AI’s transformative power promises to be most profound.

Unlike traditional research, intelligence synthesis entails the aggregation of data gathered from the public sphere (whether through observation, access, or purchase) and the application of a range of analytical techniques. The result is a trove of information with extensive potential applications. From establishing an immediate understanding of a situation through geospatial data to tracking trends on social media, the possibilities are immense. But the speed and precision of data amalgamation, the validation of sources, and the generation of hypotheses for early warning or emerging trends have, until now, been constrained by the limited capacities of individual analysts.

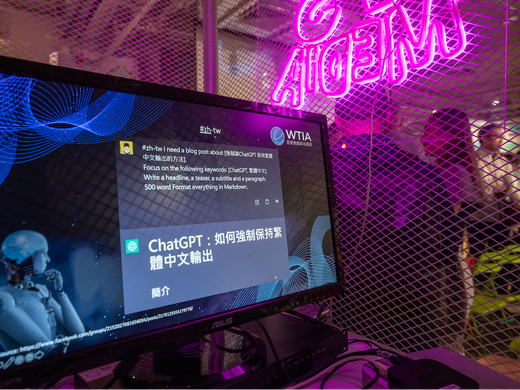

The dawn of generative AI and large-scale language models marks a significant change in this field. OSAINT, short for open-source AI intelligence analysis, is an approach that applies rapidly emerging AI tools and models to streamline and improve analysts’ tasks. OSAINT promises to automate the swift deployment of data-gathering tools, such as web spiders, scrapers and various sensors, that quickly notify analysts of new data and efficiently channel it into data “lakes” for further analysis. OSAINT AI workflows will help to alleviate the monotony of analysts’ work by summarizing, extracting and organizing data. That will allow analysts to quickly establish the confidence and reliability they need to make assessments. Additionally, AI may allow for automation of aspects of the assessment process itself and generate analysis for diverse applications. The greatest power of these tools may lie in their ability to structure and translate data into a format customized to individual decision makers, enabling rapid, informed decision making.

OSAINT is poised to redefine how public information is collected. In intelligence agencies, publicly available information (PAI) comprises any observable, accessible or acquirable data. Historically, the collection of PAI has been plagued by the cumbersome process of custom-crafting collector programs that require constant adjustments. Just as word processors assist authors in sentence completion, tools like GitHub’s Copilot enable software programmers to automate code writing, so that a lone programmer can handle multiple concurrent code-writing processes. This proves especially beneficial in addressing the challenge of ever-changing data sources, which often necessitate time-consuming code rewrites. By leveraging AI, programmers can generate multiple iterations of code snippets to anticipate alterations in data sources or swiftly adapt to changes.
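
To make the idea of anticipating changes in a data source concrete, here is a minimal sketch in Python. It assumes a collector that keeps several candidate extraction rules for a single field and tries them in order, so that a layout change at the source does not immediately force a rewrite. The field name, the patterns and the sample pages are all hypothetical, and the regular expressions stand in for whatever variants a programmer (or an AI assistant) might generate.

```python
import re
from typing import Optional

# Hypothetical candidate patterns for one field ("headline"). Each entry
# anticipates a different layout the source might switch to.
HEADLINE_PATTERNS = [
    re.compile(r'<h1 class="headline">(.*?)</h1>', re.S),
    re.compile(r'<h1[^>]*>(.*?)</h1>', re.S),
    re.compile(r'<title>(.*?)</title>', re.S),
]


def extract_headline(html: str) -> Optional[str]:
    """Return the first headline any pattern finds, or None if all fail."""
    for pattern in HEADLINE_PATTERNS:
        match = pattern.search(html)
        if match:
            return match.group(1).strip()
    # No rule matched: a signal that the collector needs human attention.
    return None


# Illustrative pages: the same story before and after a layout change.
old_layout = '<html><h1 class="headline">Port closed</h1></html>'
new_layout = '<html><title>Port closed</title><div>...</div></html>'

print(extract_headline(old_layout))
print(extract_headline(new_layout))
```

When every rule fails, the function returns `None` rather than guessing, which gives the analyst an explicit cue that the source has changed beyond what was anticipated.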

Moreover, tasks previously deemed tedious and time-consuming by human analysts, such as pattern recognition in images, audio or video, can now be automated at a fraction of the cost, in multiple languages and formats. While these capabilities were once the exclusive domain of government intelligence agencies such as the US National Security Agency and the National Reconnaissance Office, they are now affordable and commercially accessible to anyone with a credit card and a computer.

While OSAINT might not alter the essence of intelligence analysis, which involves crafting multiple alternative narratives and assigning confidence levels to each, it does greatly augment analysts’ capacity to collect and produce intelligence. OSAINT also has the potential to transform good analysts into excellent communicators, addressing one of the main hurdles in intelligence — the challenge of instilling confidence in decision makers who must base their decisions on intelligence data.

In the current climate of escalating geopolitical tensions and crises, robust intelligence is a key to informed decision making in government and business. OSAINT holds the promise of revolutionizing and democratizing risk assessment and opportunity identification.

However, this technology is not without risk. Ethical and operational guardrails are needed. Current problems with these models, such as their propensity to “hallucinate,” must be addressed. Companies such as Palantir have already begun strategizing and developing task-constrained AI to prevent it from becoming an opaque tool within the intelligence value chain.

AI is also vulnerable to hacking. As large language models (such as those behind generative AI chatbots) rapidly permeate processes in which they bring efficiencies and cost savings, they inevitably become targets for hackers. The new malware of our digital age may well be the corruption of these algorithms and their foundational data. As such systems become increasingly integrated into our economy and society, caution is imperative. That is particularly important when it comes to consequential decisions by government, security agencies and militaries.

Just as open-source intelligence — OSINT — and social media intelligence — SOCMINT — became standard-bearers of the field in their time, OSAINT is poised to become the new norm that underpins risk management.

In effect, the advent of OSAINT represents a pivotal point in the field of intelligence and risk analysis. But it’s also a Pandora’s box. As a recent internal document from Google suggests, AI platforms’ greatest superpower is that they are open-source. That makes this superpower available to all — governments, corporations, individuals and criminals. Intelligence at the speed of the internet introduces a myriad of ethical questions, not all of which governments will be able to contain or regulate.

Every revolution has its casualties. It’s crucial to set up safeguards that ensure the potential harms of utilizing OSAINT do not overshadow its benefits. One promising approach might be the use of task-specific AI. That specificity could ensure that OSAINT’s contribution to assessments remains understandable and operates within human-level boundaries. Additional safety measures, such as fail-safes that require human intervention and limit autonomous operations, particularly when significant implications are at stake, could also be crucial. These measures could apply in situations ranging from denying construction permits due to environmental concerns to preventing the greenlighting of potentially lethal actions.
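
One way to picture such a fail-safe is as a gate that lets low-stakes recommendations through automatically but holds anything consequential for a person. The Python sketch below is illustrative only: the `Recommendation` type, the `high_stakes` flag and the `decide` function are hypothetical names, and the sketch assumes the stakes are classified by policy rather than by the model itself.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Recommendation:
    action: str
    high_stakes: bool  # hypothetical flag, assumed to be set by policy


def decide(rec: Recommendation,
           human_approves: Callable[[Recommendation], bool]) -> str:
    """Auto-apply low-stakes recommendations; hold high-stakes ones for a person."""
    if not rec.high_stakes:
        return "applied automatically"
    # High-stakes path: nothing happens without an explicit human decision.
    if human_approves(rec):
        return "applied after human approval"
    return "blocked by human reviewer"


# Illustrative use: the reviewer here is a stand-in that rejects everything.
reject_all = lambda rec: False
print(decide(Recommendation("tag document as relevant", False), reject_all))
print(decide(Recommendation("deny construction permit", True), reject_all))
```

The design choice worth noting is that the human reviewer is only consulted on the high-stakes path, which limits autonomous operation exactly where the consequences are greatest while leaving routine work automated.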

The challenge lies in the fact that innovation thrives when it supplants existing methods due to its cost-effectiveness. There’s no regulatory measure that can restrict market forces without bearing a substantial political price. That fact represents both a risk and an opportunity — hence, coming to terms with, and quickly adapting to, the reality of living with OSAINT might be the best approach.