“Impossible to monitor and manage.” Those were the words spoken by the national security and intelligence adviser to the prime minister, Jody Thomas, in a recent interview on CBC radio. Thomas was referring to the tide of false information that courses through our information environment. She might have added: “impossible in a democracy.”

In July 2021, the Canadian Security Intelligence Service (CSIS) published a report meant to serve as a guide to understanding the foreign interference threat. Here is what CSIS said, just weeks before the September 2021 federal election: “A growing number of foreign states have built and deployed programs dedicated to undertaking online influence….These online influence campaigns attempt to change voter opinions, civil discourse, policymakers’ choices, government relationships, the reputation of politicians and countries, and sow confusion and distrust in Canadian democratic processes and institutions.” It was a broad canvas then, and remains so in 2023.

An important first step in understanding the current nature of the threat, and in coming up with response measures, involves definitional rigour. We need to be precise in what we are talking about, and we are not there yet.

The online influence campaigns of concern to CSIS can take three distinct shapes: disinformation; misinformation; and malinformation.

What separates these categories of false information? The key lies in the intentions and actors behind them.

Disinformation involves deliberate falsehoods generated by adversarial state actors, often through deceptive means. The intent, simply put, is to do harm to a target state and society by polluting an information environment with deliberate untruths.

Misinformation also involves untruths. But the harmful intent factor is missing. Misinformation is false information, spread by any provider (not just a state actor), that is not a deliberate untruth.

Malinformation operates in the grey zone between disinformation and misinformation. Malinformation can involve state actors as purveyors. It brings us back to the concept of harmful intent. It uses the deliberate torquing of misinformation to amplify its impact. Malinformation is a very close cousin of disinformation, as it is deceptive and aimed at capitalizing on falsehoods for some defined, nefarious purpose.

Out of this definitional soup emerges one clear feature. Disinformation, properly defined, is the national security threat. Malinformation trails its coat behind disinformation.

But misinformation is what we do to ourselves. It may be false — the product of ignorance, conspiracy theories, malice directed at elites and institutions, or political polarization — but we must learn to live with it in a democracy. Fundamentally, in a free society, people have a right to adhere to false ideas. As the CSIS director David Vigneault has reminded Canadians, there are “awful but lawful” ideas. That freedom is part, like it or not, of the long-term experiment of democracy, now affected by the disruptive impact of technological innovation. Misinformation is an organic part of democracy. The tools with which we combat it must also be organic to democracy.

Disinformation, in contrast, is an external, alien threat. Different tools must be imagined and developed to deal with it. The conflation of disinformation and misinformation may be understandable (a falsehood is a falsehood; polluting the information environment is pollution), but any confusion of terms amounts to a serious problem as we build more robust protections for our democracy.

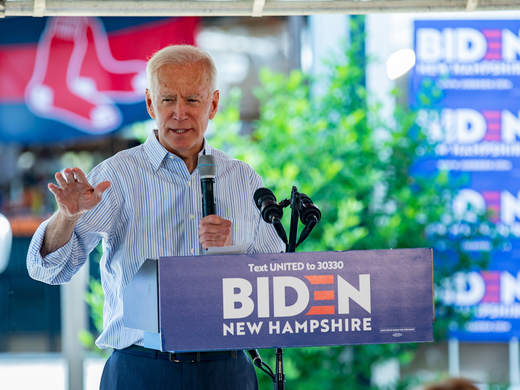

We can see signs of category confusion in two recent and prominent instances. One involves a dramatic statement made by the former leader of the Conservative Party of Canada, Erin O’Toole, before the House of Commons. O’Toole told members of Parliament (MPs) about what he had learned from a classified CSIS security briefing, provided to him under a new ministerial directive. The directive, issued amid the media furor over threats made toward another Conservative MP, Michael Chong, requires that MPs be told by CSIS when there’s intelligence about a foreign threat against them. O’Toole’s statement provided further ammunition to opposition critics who’ve argued the government did too little to protect Canada’s democratic institutions. O’Toole himself was harsh, describing the government as being “willfully blind to attacks on our parliamentary democracy.”

In summarizing the briefing he received, O’Toole discussed what he called four categories of threats. He identified “misinformation” as being at the heart of three of these categories, involving the directing hand of the Chinese state, through the apparatus of the United Front Work Department (UFWD). He said that the UFWD created products of “misinformation” targeting him; amplified that misinformation through proxy groups; and used the WeChat platform to spread it. There is little reason to doubt the substance of O’Toole’s summary: the problem is his choice of terms. What O’Toole is describing is not misinformation, but rather state-sponsored disinformation.

O’Toole’s fourth category of threats involved what he called “an active campaign of voter suppression against me, the Conservative Party of Canada and a candidate in one electoral district during the 2021 general election.” What, precisely, O’Toole was told about voter suppression is unclear. But the candidate mentioned appears to have been Kenny Chiu, an incumbent MP who was defeated in his Vancouver riding and whose electoral struggles were referenced in later media stories. The controversial report delivered by former governor general David Johnston, in his capacity as independent special rapporteur on foreign interference (he has since resigned), discussed the Chiu case and noted that, while there was online misinformation about Mr. Chiu during the 2021 election campaign, it “could not be traced to a state-sponsored source.” Again, the crucial distinction between misinformation and disinformation is at issue.

The second example of this confusion emerged in an otherwise impressive report issued in late May 2023 by the non-governmental organization Alliance Canada Hong Kong (ACHK), entitled Murky Waters: Beijing’s Influence in Canadian Domestic and Electoral Processes. The report mashes the two terms together, treating them as a single Beijing “soft power” tactic under the label “disinformation/misinformation.”

This led ACHK to recommend that the Government of Canada “hold social media companies and media organizations accountable for transparently labeling state officials, state-funded media, or disinformation/misinformation [emphasis mine].”

What is wrong here? A case can perhaps be made for transparently labelling disinformation. But any efforts to do so for misinformation would raise fundamental Canadian Charter of Rights and Freedoms protection and media freedom issues. That is not the road we should go down as a society.

But, as ACHK notes, there is a unique problem for the Sinophone community in Canada that opens up pathways not only for Chinese state disinformation but also for malinformation. This problem lies in the reliance of many in the ethnic Chinese diaspora in Canada on the Chinese-based WeChat communications platform and on the weakness of independent grassroots and local media sources to serve that community. ACHK’s solution is not, as has sometimes been argued by others, to ban WeChat, but rather to provide dedicated funding to local communities to help them develop independent information capabilities, free of foreign state sponsorship and intrusion. It’s a good concept, and points to a larger resolution for dealing with foreign interference and disinformation. It also, of course, applies to misinformation.

This brings us back to the comment by Jody Thomas, the prime minister’s national security and intelligence adviser: in a nutshell, disinformation cannot be eradicated by law or regulation. Naming and shaming perpetrators may put a temporary crimp on a foreign state’s influence operations. It may also be of value for public education. But authoritarian regimes will continue to practise information warfare regardless. We can, and should, kick out offending diplomats who design or try to deliver such programs. We must also accept that they will be replaced by others with similar missions.

Nor can we hope to rely on any sort of foreign influence registry to weed out disinformation. The cost of folding media organizations and media expression into such a registry far outweighs any benefit.

The best we can expect is that disinformation will be recognized for what it is, and that a low currency will attach to it. That hope depends on a number of things, including much better transparency around national security issues; access to an open information environment with multiple sources of authoritative information, beyond the control of any sinister foreign hand; and a willingness to recognize disinformation for the shoddy product that it is.

And yes, it is a shoddy product. Disinformation only works well in an information environment under the strict control of an authoritarian regime. We should not willfully exaggerate its impact, or reach for exaggerated tools to combat it, or assume diaspora communities will be its easy or willing victims. The lower the currency that disinformation is seen to have, the less value it will have for perpetrators.

What, then, is in our counter-disinformation tool kit? We certainly should not try to monitor every attempt at disinformation; rather, we should first aim to understand, at a strategic level, the informational intentions and, importantly, the capabilities of states such as Russia, China, Iran and North Korea. That is an intelligence mission, one that can be conducted using open-source intelligence techniques and in which academia and the private sector could play important roles. Second, it’s important to name and shame as appropriate. If foreign diplomats are implicated in disinformation, the authorities should respond, including with rapid expulsion when warranted.

In the same vein, when domestic proxy actors can be identified, government entities should take action, through either criminal sanctions or CSIS and Royal Canadian Mounted Police (RCMP) threat reduction measures. An example of the latter came when the RCMP deployed uniformed officers and marked police cruisers outside so-called “Chinese police stations” and effectively forced their closure. Third, the authorities should tell Canadians about disinformation threats consistently and regularly, with clarity and rigour in their use of terms. The government should take pains to draw targeted diaspora communities into the discussion, listening to their concerns and drawing on their experience. It should help them achieve resilience, especially by ensuring, as ACHK suggests, that they have multiple, independent and authoritative information sources available. Finally, keep disinformation threats in perspective. Don’t over-hype the threat. There are worse forms of foreign interference.

Espionage, cyberattacks, intellectual property theft and economic harms, intimidation and threats of violence, suppression of dissent, and the suborning of individuals are the most serious forms of foreign interference in our democracy. Why? Because they rob this country of secrets and data for the benefit of our adversaries. At an individual level they steal livelihoods, and basic freedoms, in the pursuit of a foreign state’s agenda.

But disinformation is a pickpocket, a nuisance to a robust democracy, nothing more. It can’t silence us. Nor should it shake us.

“Impossible to monitor and manage,” the words used by the national security and intelligence adviser to describe the tide of false information in our society, should not be read as defeatism. We just need the right perspective on the threat, the right understanding of what we are talking about, and the right tools with which to curb it.