In recent years, concerns over foreign interference from “bad actors” have increased, and in the wake of the 2016 US presidential election, governments around the world, social media companies and civil society alike have been on the lookout for such attempts to degrade the integrity of our elections or, more vaguely, to “sow discord.” From pseudonymous trolls and botnets to outrage-inducing, hyper-partisan content, it seems that week after week, there is news that online accounts are pushing narratives in the interest of Russia, Iran or China. The Global Engagement Center (GEC), a division of the US State Department, for example, has alleged that Russia is operating an “ecosystem” of humans and bots to amplify conspiracy theories related to COVID-19 in a bid to “sow discord and undermine U.S. institutions and alliances.” Senator Elizabeth Warren even released a detailed plan to fight disinformation as part of her presidential campaign, citing “foreign actors” as the main threat. Scholars and journalists are also on the hunt. Indeed, plenty of ink has been spilled on the ills of “weaponized social media” and the next generation of “active measures.”

However, despite all the fears of mass-targeted influence operations from foreign adversaries, it remains unclear whether they have much impact at all.

Evidence and analysis of activity from the Russia-based Internet Research Agency (IRA) continue to be debated. Although some suggest that the IRA plausibly influenced public opinion, there is very little evidence of direct impact on the 2016 US presidential election. The bulk of its activity was devoted to audience building, and when compared to the massive volumes of media consumed by the average American across mainstream, independent and social media, Russian-sponsored activities would have been but a drop in an otherwise chaotic and constantly churning sea of information. Attempts by China to influence the Taiwanese election were likewise ineffective, as incumbent and pro-democracy leader Tsai Ing-wen won a second term by a wide margin. Reporting on such influence operations, however, is often couched in wording that implies attribution and effect without actual verification or convincing evidence.


Of course, the threat of influence operations should not be taken lightly and warrants investigation and thoughtful study. Yet, the knee-jerk reactions to foreign influence campaigns from some policy makers and parts of civil society have exaggerated the impact, and therefore the threat, of foreign-targeted influence operations. And, in an ironic twist, our fears and concerns that foreign actors are somehow interfering with democracy and deliberative discourse are, counterintuitively, allowing for the further erosion of democracy and deliberative discourse.

First, the widespread use of the term “fake news,” combined with concerns over national security (although often sincere and well-meaning), has given illiberal and authoritarian-leaning governments around the world top cover to enact a range of censorship-enabling measures that are then used to crack down on dissent, target political opponents and instill a culture of self-censorship. In the Philippines, where President Rodrigo Duterte continues to prosecute a “war on drugs” that has led to the deaths of thousands, penalties for spreading false and alarming information were included in a special measures bill to combat COVID-19. Critics of the bill warn that it will be selectively used to punish political opponents and deter dissent. Last year, Singapore, which has continually ranked poorly for press freedom, also passed a law targeting “fake news” and false information. Citing examples of foreign interference in the United States and the United Kingdom, Singapore justified the bill on the grounds of national security. Borrowing a common refrain from the West, the government stated that falsehoods can be “weaponised, to attack the infrastructure of fact, destroy trust and attack societies.” Now Nigeria, again in the name of national security, is mulling its own bill targeting false information, which has widely been mocked as a copy-paste of Singapore’s law.

Second, the fear of foreign speech could exacerbate ongoing tensions between states in a way that will likely hurt civil society and press freedom. Although influence operations have little (if any) actual impact on a state’s national security, governments may use the fear of foreign speech to expel, control and surveil foreign journalists and civil society. Take China and the United States, for example. Each accuses the other of interfering in its domestic affairs, citing influence operations and collusion that may be detrimental (although these claims are rarely supported with specifics or evidence). In turn, both states have retaliated by expelling journalists and forcing media workers to register personal information with government officials. Hua Chunying, a spokeswoman for the Chinese foreign ministry, defended the expulsion of journalists from The New York Times, The Wall Street Journal and The Washington Post, tweeting: “We reject ideological bias against China, reject fake news made in the name of press freedom, reject breaches of ethics in journalism.” And as Harvard Law School’s Evelyn Douek shows in her chapter for the forthcoming book, “Combating Election Interference: When Foreign Powers Target Democracies,” the way social media companies and governments are moderating foreign content amounts to a “free speech blind spot,” due to their seemingly ad hoc and inconsistent enforcement. The ongoing rhetoric of fear surrounding foreign influence operations and espionage is now expanding to include foreign students and businesses. In addition to curtailing the flow of information and intellectual collaboration, such actions may also contribute to increasing xenophobia.

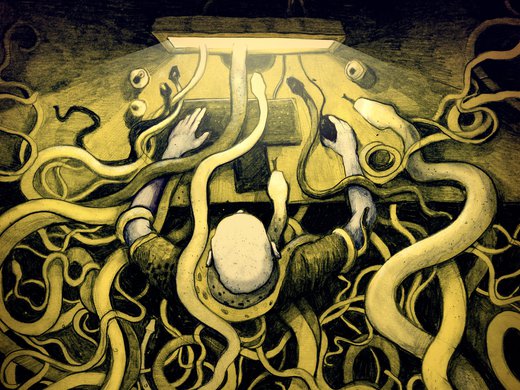

Third, an overemphasis on “bad actors” and their supply of disinformation diverts our attention from the material problems that drive our demand for and receptivity to dubious content of suspicious origin. In a commentary for the Harvard Kennedy School’s Misinformation Review, Alexei Abrahams and I argue that thus far the West’s countermeasures in the fight against misinformation and disinformation have relied on repressive strategies: root out the networks, shut down the accounts and remove the content. However, this strategy is, as many have pointed out already, a never-ending “game of whack-a-mole” that (at best) provides short-term tactical gains. These palliative measures must be coupled with long-term solutions that take aim at the reasons why people flock to fringe websites and dubious accounts for their news. It is not that we want to be lied to, but rather that our trust in the institutions and authorities to which we delegate our well-being and future has eroded. As such, redressive strategies should also be explored to regain and restore trust and legitimacy in our institutions, politicians and governing bodies. And, where possible, domestic policy should be directed at making democratic participation easier. “Bad actors” will always be around and try to mess with information systems, but we can choose to make voting easier, prevent gerrymandering, and amend or repeal laws that lead to voter suppression.


Mass-targeted covert influence operations and disinformation campaigns are real. Studies show that they promote narratives that aim to provoke outrage, capitalize on social cleavages and, in some cases, serve the interests of certain countries. However, evidence of activity is not evidence of impact. To be sure, we should be aware of such operations, bringing them to light and, when appropriate, removing them. However, if the free flow of ideas, freedom of expression and a better quality of democratic participation are the ultimate goals, relying on detection and deletion is not enough, and, as outlined above, the exaggeration of the threat of foreign influence operations may do more harm than good. Instead, we should invest in solutions that shore up trust and increase political participation, civil discourse and pluralism.

As a first step, the US Foreign Agents Registration Act, which risks being abused for political reasons, should be reformed, as Nick Robinson argues in Foreign Policy. Second, increasing transparency around campaign funding and online ad spending would also be helpful, specifically around “dark money,” where the identities of the donors are concealed. And lastly, states need to do the hard work of governance. In many ways the prevalence of false and misleading content is symptomatic of deeper issues. As Johan Farkas and Jannick Schou demonstrate in their book, Post-Truth, Fake News and Democracy, the decline in democracy has been ongoing for decades and is not because of social media: “There is a series of deep-seated problems facing liberal democracies, but the rise of fake news and alternative facts is not the biggest of our problems.” Instead of looking overseas for scapegoats, we should be looking at why trust in our own institutions and authorities has fallen, why civil discourse has devolved and how to better address the many social divisions that drive our receptivity to dubious content.