Even as artificial intelligence (AI) is forecast to exceed human capabilities across a range of industries, it’s also predicted to augment human labour. The consulting firm McKinsey, for example, lists some 400 use cases representing $6 trillion in value across 19 industries in which AI will transform the nature of work. What about government? As a recent paper from the Organisation for Economic Co-operation and Development concludes, “government use of AI trails that of the private sector.” Fortunately, this may be changing.

In the United States, AI is being deployed in customer service, infrastructure planning, legal adjudication, citizen response systems, regulation (“regtech”), cybersecurity, fraud detection and national security. In Estonia, the government is developing AI components that underpin open-source government services through its “AI Govstack” platform. More broadly, governments in more than 60 countries are now developing national AI strategies, most of them focused on public sector transformation.

Toward Algorithmic Government?

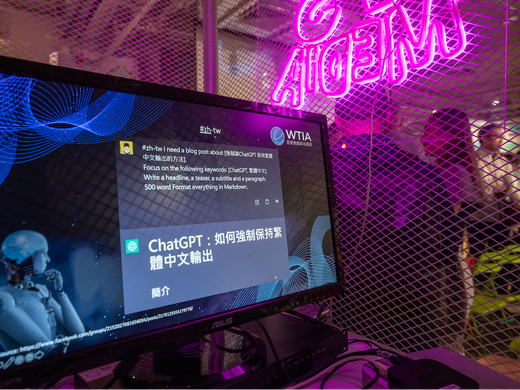

Given the proliferation of large language models such as those behind ChatGPT, many governments now hope to “augment” their public service delivery systems using machine-learning applications. These include real-time management of national infrastructure using the Internet of Things, automated compliance and regulation systems using “digital twins,” and support for citizen engagement using chatbots. AI could significantly improve public service delivery and elevate the work of public service professionals. Alternatively, AI could remake government altogether.

Coining the term “algorithmic regulation,” Silicon Valley’s Tim O’Reilly has argued that AI is a tool for accelerating the performance and learning capacities of democratic governments. In his view, if machine learning were used to increase transparency and optimize the allocation of resources, government regulations could be configured as algorithms or rule sets that are continually updated with fresh data. Such algorithmic government would, he contends, increase efficiency, reduce costs and improve public services by automating decision making.

For many, the thought of governments using AI to erect a “digital leviathan” engenders discomfort or even horror. Indeed, the idea of AI becoming an authoritarian monstrosity has a long pedigree in science fiction, although the genre has imagined benevolent versions too. In the 1999 movie The Matrix, advanced AI has colonized the Earth in order to exploit human beings, while in Iain M. Banks’s “Culture” novels, AI administrators known as “Minds” serve as benevolent caretakers overseeing a post-work society. Outside science fiction, however, most contemporary policy proposals focus more modestly on making AI transparent and accountable.

Digital Leviathan

The European Commission, for example, has proposed the Artificial Intelligence Act, which aims to create a legal framework for AI, while China has adopted several regulations relevant to AI, including the Cybersecurity Law and rules to protect personal data. But these efforts raise the question: What systems of government are necessary for managing AI-driven societies? And what is the potential for exploitation across a vast digital leviathan of algorithmic management and control?

The current scramble to regulate AI amid a rising data economy reflects a growing awareness among the world’s governments of the very real challenges AI now poses. Deep learning alone has transformed how AI is used for decision support, forecasting, data classification and content generation. Indeed, the recent acceleration in both the power and the scope of AI has led many experts in the field to fear that the technology is advancing too quickly.

What’s clear is that as big data and machine learning continue to be deployed at scale, their capacity to amplify systems of command and control will necessarily demand new regulation. But what kind of regulation? If we understand AI as a set of statistical methods and practices that replicate human capabilities, then there is no single field to regulate. Like electricity, AI is a general-purpose technology that touches virtually every product or service that uses computation to perform a task.

Augmenting Democracy

Regulating AI could mean developing new global governance bodies modelled on the International Atomic Energy Agency or the World Intellectual Property Organization. Or it could mean something else entirely. Unlike nuclear energy or weapons of mass destruction, AI is not a single technology; it is closer to a collection of tools than to a particular resource or weapon. For this reason, regulating AI is probably less about erecting new institutions and more about crafting good laws and good design principles.

This principle of good design applies to the evolution of our institutions as well. As Beth Noveck, former head of President Barack Obama’s Open Government Initiative, explains, data-driven networks are accelerating not only innovation but also decision making. As we move beyond industrial-era bureaucracies characterized by closed systems of decision making, AI and big data will increasingly demand new tools for democratic self-government. Put differently, even as AI systems become a new object of regulation, they will reshape the institutions and practices undergirding democracy itself.