Over the past three years, the debate about the role of digital technology in our society, our economy and our democracies has gone through a remarkable transformation. Following two decades of techno-optimism, whereby digital technology generally, and social media specifically, was viewed as broadly aligned with democratic good and so left to be governed in a laissez-faire environment, we are now in the midst of what could be called a “techlash.”

The recent catalyst for this turn was the 2016 US presidential election — a moment that saw the election of Donald J. Trump with the aid of a Russian government adept at leveraging the digital infrastructure to manipulate the American electorate. But the root cause for this turn runs far deeper. It is the result of a notably laissez-faire policy approach that has allowed our public sphere to be privatized, embedding in our digital ecosystem the incentive structures of markets while allowing the social, economic and democratic costs of an unfettered system to be externalized, and therefore borne by the public.

Ultimately, the platform web is made up of privately owned public spaces, largely governed by the commercial incentives of private actors, rather than the collective good of the broader society. Platforms are more like shopping malls than town squares — public to an extent, but ultimately managed according to private interests. Once nimble start-ups, Google, Facebook, Twitter and Amazon now serve billions of users around the world and increasingly perform core functions in our society. For many users, particularly those in emerging economies, these companies are the primary filtering point for information on the internet (Cuthbertson 2017). The private gains are clear to see — Google, Facebook and Amazon are among the most profitable companies in history. But in spite of myriad benefits offered by platforms, the costs are clear as well.

The social costs of the platform economy are manifesting themselves in the increasingly toxic nature of the digital public sphere, the amplification of misinformation and disinformation, the declining reliability of information, heightened polarization and the broad mental health repercussions of technologies designed around addictive models.

The economic costs are grounded in the market distortion created by increased monopolistic behaviour. The vast scale of the digital platform economy not only affords near-unassailable competitive advantages, but also invites abuses of monopoly power in ways that raise barriers to market entry (Wu 2018). Moreover, the ubiquity of the platform companies in the consumer marketplace creates special vulnerabilities because of the amount of control they wield over data, advertising and the curation of information.

The costs to our democracy are grounded not only in the decline of reliable information needed for citizens to be informed actors in the democratic process and the undermining of public democratic institutions, but in threats to the integrity of the electoral system itself.

As we collectively learn more about the nature of these problems, in all their complexity and nuance, this moment will demand a coordinated and comprehensive response from governments, civil society and the private sector. Yet, while there is growing recognition of the problem, there remains significant ambiguity and uncertainty about the nature and scale of the appropriate response.

The policy response is riddled with challenges. Since the digital economy touches so many aspects of our lives and our economies, the issues that fall under this policy rubric are necessarily broad. In countries around the world, data privacy, competition policy, hate speech enforcement, digital literacy, media policy and governance of artificial intelligence (AI) all sit in this space. What’s more, they are often governed by different precedents, regulated by siloed departmental responsibility, and lack coordinated policy capacity. This confusion has contributed to a policy inertia and increased the likelihood that governments fall back on self-regulatory options.

And so democratic governments around the world have begun to search for a new strategy to govern the digital public sphere. Looking for an overarching framework, many are converging on what might be called a platform governance agenda.

The value of a platform governance approach is that first, it provides a framework through which to connect a wide range of social, economic and democratic harms; second, it brings together siloed public policy areas and issues into a comprehensive governance agenda; and third, it provides a framework for countries to learn from and coordinate with each other in order to exert sufficient market pressure.

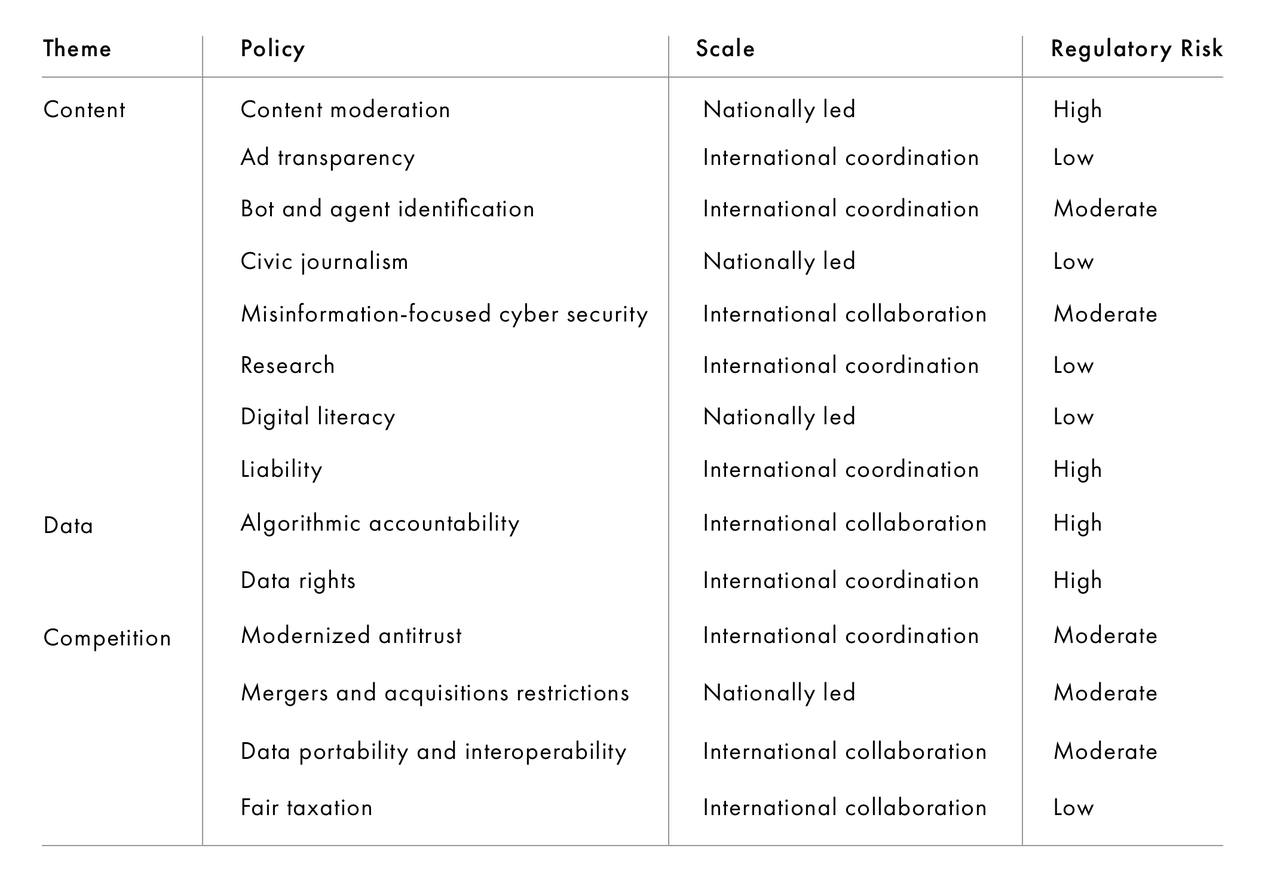

But what might a platform governance agenda look like? There are three dimensions to consider in answering this question — policy coordination, scale of appropriate response and degree of regulatory risk.

First, there are no single-issue solutions to the challenges of technology and society. In order to address the breadth of policy areas in this space, we need a combination of content, data and competition policies that are implemented in coordination across government and between governments. This will demand a coordinated “whole-of-government” effort to bring together a wide range of policies. The challenges we confront are systemic, built into the architecture of digital media markets; the public policy response must therefore be holistic and avoid reactions that solve for one aspect of the problem while ignoring the rest.

Second, within the platform governance agenda there is a need for multiple scales of responses for different policy issues: national implementation; international coordination; and international collaboration. As this essay series suggests, there is an urgent need for global platform governance, as no single state can shift the structure of the platform economy alone. Platforms are global organizations, which, in the absence of enforced national rules, will default to their own terms of service and business practices. At the same time, because of the scale of the operation of these companies and the power they have accrued as a result, as well as the complexity of the new governance challenges they present, it is very difficult for any individual country to go it alone on regulation. However, this need for global governance is complicated by a parallel need for subsidiarity in policy responses. On some issues, such as speech regulation, policy must be nationally implemented. In these cases, countries can learn from and iterate off each other’s policy experimentation. On others, such as ad-targeting laws, international coordination is necessary, so that countries can exert collective market power and align incentives. On others, such as AI standards, international collaboration is needed to ensure uniform application and enforcement and to overcome collective action problems.

Third, the issues that fall under the platform governance agenda are of varying levels of complexity and regulatory risk. Some policies have a high degree of consensus and limited risk in implementation. The online ad micro-targeting market could be made radically more transparent, and in some cases could be suspended entirely. Data privacy regimes could be updated to provide far greater rights to individuals and greater oversight and regulatory power to punish abuses. Tax policy could be modernized to better reflect the consumption of digital goods and to crack down on tax-base erosion and profit shifting. Modernized competition policy could be used to restrict and roll back acquisitions and to separate platform ownership from application or product development. Civic media could be supported as a public good. And large-scale and long-term civic literacy and critical-thinking efforts could be funded at scale by national governments. That few of these have been implemented is a problem of political will, not policy or technical complexity. Other issues, however, such as content moderation, liability and AI governance, are far more complex and are going to need substantive policy innovation.

The categorization of these three variables in Table 1 is not intended to be definitive. Many of these issues overlap categories, and the list of policies is certainly not exhaustive. But it may serve as a typology for how this broad agenda can be conceptualized.

Table 1: Variables Affecting Platform Governance

Just as we needed (and developed) new rules for the post-war industrial economy, we now need a new set of rules for the digital economy. Instead of rules to govern financial markets, monetary policy, capital flow, economic development and conflict prevention, we now need rules to regulate data, competition and content — the intangible assets on which most of the developed economy, and increasingly the health of our societies, now depend. This is the global governance gap of our time.

As this model evolves, there will be a need for other countries to not only collaborate on implementation, but also coordinate responses and iterate policy ideas. This work will invariably occur through state organizations such as the Group of Seven, the Group of Twenty, the Organisation for Economic Co-operation and Development, and the United Nations. But the situation will also demand new institutions to bring together the state and non-state actors needed to solve these challenging policy problems. One promising place for this policy coordination is the International Grand Committee on Big Data, Privacy and Democracy (IGC), which has evolved to use platform governance as its overarching frame. As a self-selected group of parliamentarians concerned with issues of platform governance, the IGC has the opportunity to be a catalyzing international institution for the design and coordination of a platform governance agenda.

And that is why CIGI has convened this essay series, and why we will bring a network of global scholars to Dublin in November 2019 to support this nascent international institution. Whatever the IGC’s role ahead within this emerging realm of governance, we hope this conversation sparks a much-needed global governance process.