On Saturday, August 3, 2019, a gunman opened fire in a Walmart in El Paso, Texas, killing 22 people and wounding 27 before he was taken into custody by police. As news of the attack spread, so did a white supremacist manifesto, allegedly written by the shooter and uploaded hours before the shooting to an anonymous forum, 8chan (Harwell 2019b). This document was archived and reproduced, amplified on other message boards and social media, and eventually reported in the press. This was the third mass shooting linked to the extremist haven 8chan in six months, and followed the same pattern as the synagogue shooting in Poway, California, in April and the Christchurch, New Zealand, mosque shootings in March: post a racist screed to 8chan; attack a targeted population; and influence national debates about race and nation.

What will it take to break this circuit, where white supremacists see that violence is rewarded with amplification and infamy? While the answer is not straightforward, there are technical and ethical actions available.

After the white supremacist car attack in Charlottesville, Virginia, in 2017, platform companies such as Facebook, Twitter and YouTube began to awaken to the fact that platforms are more than just a reservoir of content. Platforms are part of the battleground over hearts and minds, and they must coordinate to stop the amplification of white supremacy across their various services. Advocacy groups, such as Change the Terms, are guiding platform companies to standardize and enforce content moderation policies about hate speech.

But what happens to content not originating on major platforms? How should websites with extremist and white supremacist content be held to account, at the same time that social media platforms are weaponized to amplify hateful content?

Following the El Paso attack, the original founder of 8chan has repeatedly stated that he believes the site should be shut down (quoted in Harwell 2019a), but he is no longer the owner. The long-term failure to moderate 8chan led to a culture of encouragement of mass violence, harassment and other depraved behaviour. The current owner of 8chan resists moderation on principle, and because the site is deeply committed to anonymity, tracing content back to original posters is nearly impossible. The more heinous the content, the more it circulates. 8chan, among other extremist websites, also contributed to the organization of the Unite the Right Rally in Charlottesville, where Heather Heyer was murdered in the 2017 car attack and where many others were injured.

In the wake of Charlottesville, corporations grappled with the role they played in supporting white supremacists organizing online (Robertson 2017). After the attack in Charlottesville and another later in Pittsburgh in October 2018, in which a gunman opened fire on the Tree of Life synagogue, there was a wave of deplatforming and corporate denial of service (Koebler 2018; Lorenz 2018), spanning cloud service companies (Liptak 2018), domain registrars (Romano 2017), app stores (O’Connor 2017) and payment servicers (Terdiman 2017). While some debate the cause and consequences of deplatforming specific far-right individuals on social media platforms, we need to know more about how to remove and limit the spread of extremist and white supremacist websites (Nouri, Lorenzo-Dus and Watkin 2019).

Researchers also want to understand the responsibility of technology corporations that act as the infrastructure allowing extremists to connect to one another and to incite violence. Corporate decision making is now serving as large-scale content moderation in times of crisis, but is corporate denial of service a sustainable way to mitigate white supremacists organizing online?

On August 5, 2019, one day after two mass shootings rocked the nation, Cloudflare, a content delivery network, announced a termination of service for 8chan via a blog post written by CEO Matthew Prince (2019). The decision came after years of pressure from activists. “Cloudflare is not a government,” writes Prince, stating that his company’s success in the space “does not give us the political legitimacy to make determinations on what content is good and bad” (ibid.). Yet, due to insufficient research and policy about moderating the unmoderatable and the spreading of extremist ideology, we are left with open questions about where content moderation should occur online.

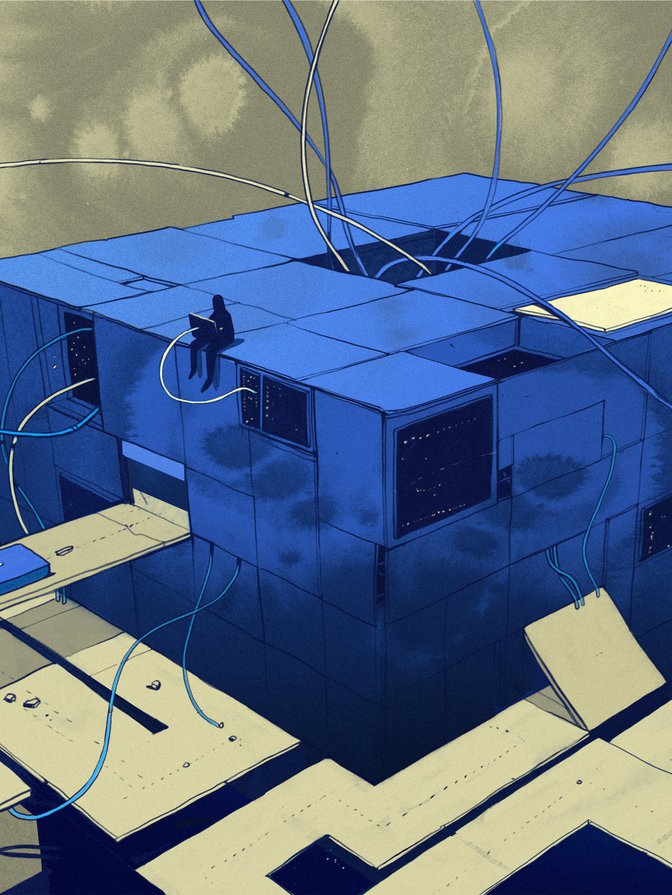

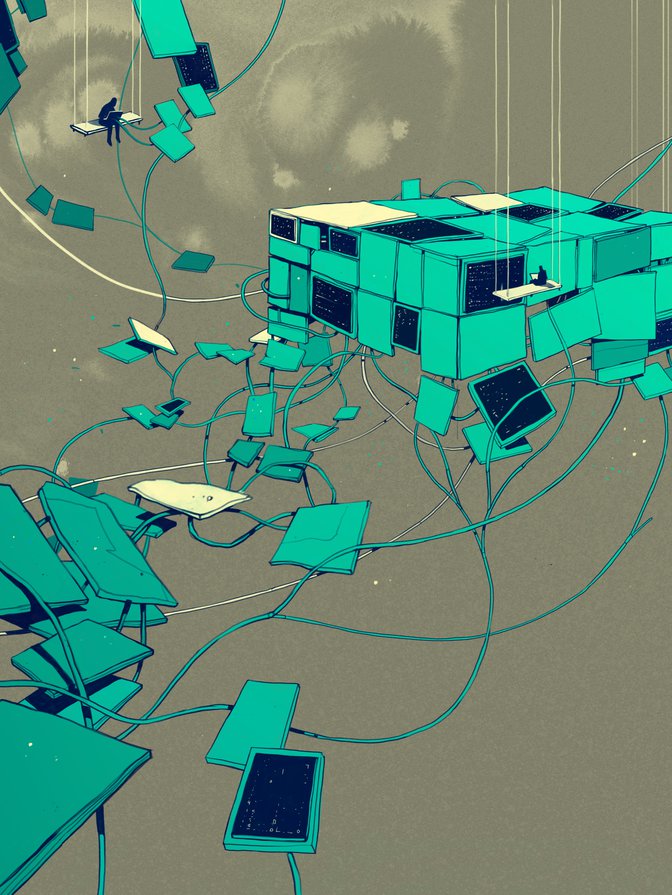

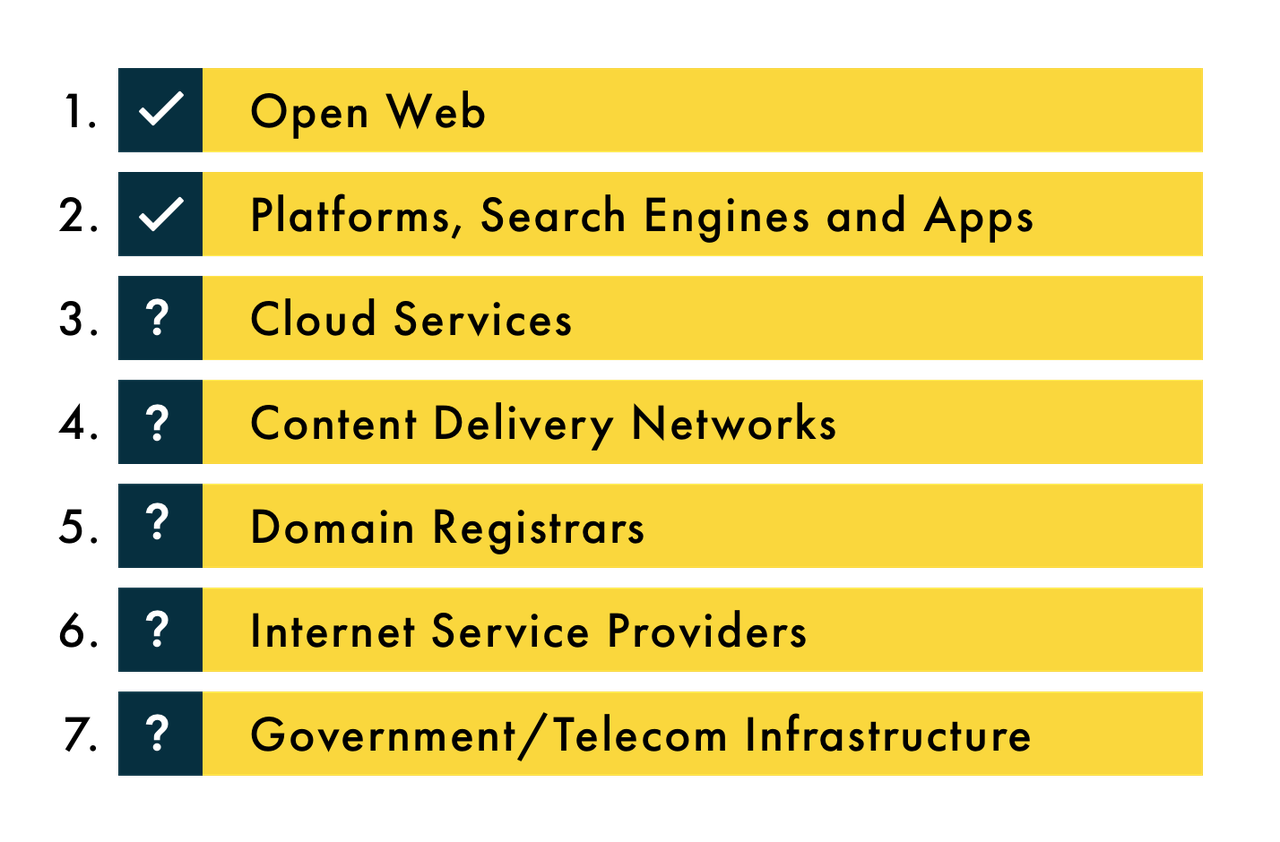

Figure 1: Content Moderation in the Tech Stack

When discussions of content moderation take a turn for the technical, we tend to hear a lot of jargon about “the tech stack” (Figure 1). It is important to understand how the design of technology also shows us where the power lies.

Most debates about content moderation revolve around individual websites’ policies for appropriate participation (level 1) and about major platforms’ terms of service (level 2). For example, on level 1, a message board dedicated to hobbies or the user’s favourite TV show may have a policy against spamming ads or bringing up political topics. If users don’t follow the rules, they might get a warning or have their account banned.

On level 2, there is a lot of debate about how major corporations shape the availability and discoverability of information. While platforms, search engines and apps have policies against harassment, hate and incitement to violence, it is difficult to enforce these policies given the enormous scale of user-generated content. In Sarah Roberts’s new book Behind the Screen: Content Moderation in the Shadows of Social Media (2019), she documents how this new labour force is tasked with removing horrendous violence and pornography daily, while being undervalued despite their key roles working behind the scenes at the major technology corporations. Because of the commercial content moderation carried out by these workers, 8chan and other extremist sites cannot depend on social media to distribute their wares.

For cloud service providers on level 3, content moderation occurs in cases where sites are hosting stolen or illegal content. Websites with fraught content, such as 8chan, will often mask or hide the location of their servers to avoid losing hosts. Nevertheless, actions by cloud service companies post-Charlottesville did destabilize the ability for the so-called alt-right to regroup quickly (Donovan, Lewis and Friedberg 2019).

On level 4 of the tech stack, content delivery networks (CDNs) help match user requests with local servers to reduce network strain and speed up websites. CDNs additionally provide protection from malicious access attempts, such as distributed denial-of-service attacks that overwhelm a server with fake traffic. Without the protection of CDNs such as Cloudflare or Microsoft’s Azure, websites are vulnerable to political or profit-driven attacks, such as a 2018 attempt to overwhelm GitHub (Kottler 2018) or a 2016 incident against several US banks (Volz and Finkle 2016). Cloudflare, the CDN supporting 8chan, responded to the El Paso attack by refusing to continue service to 8chan. Despite attempts to come back online, 8chan has not been able to find a new CDN at this time (Coldewey 2019).

In the aftermath of Charlottesville, Google froze the domain of a neo-Nazi site that organized the event and GoDaddy also refused services (Belvedere 2017). In response to the El Paso attack, another company is taking action. Tucows, the domain registrar of 8chan, has severed ties with the website (Togoh 2019). It is rare to see content decisions on level 5 of the tech stack, except in the cases of trademark infringement, blacklisting by a malware firm or government order.

Generally speaking, cloud services, CDNs and domain registrars are considered the backbone of the internet, and sites on the open web rely on their stability, both as infrastructure and as politically neutral services.

Level 6 is a different story. Internet service providers (ISPs) allow access to the open web and platforms, but these companies are in constant litigious relations with consumers and the state. ISPs have been seen to selectively control access and throttle bandwidth to content profitable for them, as seen in the ongoing net neutrality fight. While the divide between corporations that provide the infrastructure for our communication systems and the Federal Communications Commission is overwhelmed by lobbying (West 2017), the US federal and local governments remain unequipped to handle white supremacist violence (Andone and Johnston 2017), democratic threats from abroad (Nadler, Crain and Donovan 2018), the regulation of tech giants (Lohr, Isaac and Popper 2019) or the spread of ransomware attacks in cities around the country (Newman 2019). However, while most ISPs do block piracy websites, at this stage we have not seen US ISPs take down or block access to extremist or white supremacist content. Other countries, for example, Germany, are a different case entirely as they do not allow hate speech or the sale of white supremacist paraphernalia (Frosch, Elinson and Gurman 2019).

Lastly, on level 7, some governments have blacklisted websites and ordered domain registrars to remove them (Liptak 2008). Institutions and businesses can block access to websites based on content. For example, a library will block all manner of websites for reasons of safety and security. In the case of 8chan, while US President Trump has called for law enforcement to work with companies to “red flag” posts and accounts, predictive policing has major drawbacks (Munn 2018).

At every level of the tech stack, corporations are placed in positions to make value judgments regarding the legitimacy of content, including who should have access, and when and how. In the case of 8chan and the rash of premeditated violence, it is not enough to wait for a service provider, such as Cloudflare, to determine when a line is crossed. Unfortunately, in this moment, a corporate denial of service is the only option for dismantling extremist and white supremacist communication infrastructure.

The wave of violence has shown technology companies that communication and coordination flow in tandem. Now that technology corporations are implicated in acts of massive violence by providing and protecting forums for hate speech, CEOs are called to stand on their ethical principles, not just their terms of service. For those concerned about the abusability of their products, now is the time for definitive action (Soltani 2019). As Malkia Cyril (2017) of Media Justice argues, “The open internet is democracy’s antidote to authoritarianism.” It’s not simply that corporations can turn their backs on the communities caught in the crosshairs of their technology. Beyond reacting to white supremacist violence, corporations need to incorporate the concerns of targeted communities and design technology that produces the Web we want.

Regulation to curb hateful content online cannot begin and end with platform governance. Platforms are part of a larger online media ecosystem, in which the biggest platforms not only contribute to the spread of hateful content, but are themselves an important vector of attack, increasingly so as white supremacists weaponize platforms to distribute racist manifestos. It is imperative that corporate policies be consistent with regulation on hate speech across many countries. Otherwise, corporate governance will continue to be not merely haphazard but potentially endangering for those who are advocating for the removal of hateful content online. In effect, defaulting to the regulation of the country with the most stringent laws on hate speech, such as Germany, is the best pathway forward for content moderation, until such time as a global governance strategy is in place.

Acknowledgements

Brian Friedberg, Ariel Herbert-Voss, Nicole Leaver and Vanessa Rhinesmith contributed to the background research for this piece.